Journal of Machine Learning Research 25 (2024) 1-62 Submitted 11/23; Revised 4/24; Published 5/24

Optimal Locally Private Nonparametric Classification with Public Data

School of Statistics

Renmin University of China

100872 Beijing, China

Center for Applied Statistics, School of Statistics

Renmin University of China

100872 Beijing, China

Editor: Po-Ling Loh

Abstract

In this work, we investigate the problem of public-data-assisted non-interactive Local Differentially Private (LDP) learning with a focus on non-parametric classification. Under the posterior drift assumption, we derive, for the first time, the mini-max optimal convergence rate under the LDP constraint. Then, we present a novel approach, the locally differentially private classification tree, which attains the mini-max optimal convergence rate. Furthermore, we design a data-driven pruning procedure that avoids parameter tuning and provides a fast converging estimator. Comprehensive experiments conducted on synthetic and real data sets show the superior performance of our proposed methods. Both our theoretical and experimental findings demonstrate the effectiveness of public data compared to private data, which leads to practical suggestions for prioritizing non-private data collection.

Keywords: Decision Tree, Local Differential Privacy, Non-parametric Classification, Posterior Drift, Public Data

1. Introduction

Local differential privacy (LDP) (Kairouz et al., 2014; Duchi et al., 2018), a variant of differential privacy (DP) (Dwork et al., 2006), has gained considerable attention in recent years, particularly among industrial developers (Erlingsson et al., 2014; Tang et al., 2017). Unlike central DP, which relies on a trusted curator who has access to the raw data, LDP assumes that each sample is possessed by a data holder, and each holder privatizes their data before it is collected by the curator. Although this setting provides stronger protection, learning with perturbed data requires more samples than in the central setting (Duchi et al., 2018). Moreover, basic techniques such as principal component analysis (Wang and Xu, 2020; Bie et al., 2022), data standardization (Bie et al., 2022), and tree partitioning (Wu et al., 2022) become troublesome or even infeasible. Consequently, LDP introduces challenges for various machine learning tasks that are otherwise considered straightforward, including density estimation (Duchi et al., 2018), mean estimation (Duchi et al., 2018), Gaussian estimation (Joseph et al., 2019), and change-point detection (Berrett and Yu, 2021).

©2024 Yuheng Ma and Hanfang Yang.

License: CC-BY 4.0, see https://creativecommons.org/licenses/by/4.0/. Attribution requirements are provided at http://jmlr.org/papers/v25/23-1563.html.

Fortunately, in certain scenarios, private estimation performance can be enhanced with an additional public data set. From an empirical perspective, numerous studies have demonstrated the effectiveness of using public data (Papernot et al., 2017, 2018; Yu et al., 2021b, 2022; Nasr et al., 2023; Gu et al., 2023). In most cases, the public data is out-of-distribution and collected from other related sources. Yet, although public data from an identical distribution has been extensively studied (Bassily et al., 2018; Alon et al., 2019; Kairouz et al., 2021; Amid et al., 2022; Ben-David et al., 2023; Lowy et al., 2023; Wang et al., 2023a), only a few works have systematically described the out-of-distribution relationship. Focusing on an unsupervised problem, namely Gaussian estimation, Bie et al. (2022) discussed the gain of using public data with respect to the total variation distance between the private and public data distributions. As for supervised learning, Gu et al. (2023) discussed the similarities between data sets to guide the selection of appropriate public data, and Ma et al. (2023) leveraged public data to create informative partitions for LDP regression trees. Neither of them discussed the relationship between the regression functions of the private and public data distributions. To address this gap between theory and practice in supervised private learning with public data, we pose the following questions:

1. Theoretically, when is labeled public data beneficial for LDP learning?

2. Under such conditions, how can public data be leveraged effectively?

Hyperparameter tuning is an essential yet challenging task in private learning (Papernot and Steinke, 2021; Mohapatra et al., 2022) and remains an unsolved problem under local differential privacy. Common strategies, such as cross-validation and information criteria, are unavailable because they require multiple queries to the training data. Tuning by holding out a validation set is restrictive in terms of the size of the candidate hyperparameter space (Ma et al., 2023). Moreover, leveraging public data necessitates more complex models, which often have more hyperparameters to tune. Consequently, models with public data may face difficulties in parameter selection, which leads to the third question:

3. Can data-driven hyperparameter tuning approaches be derived?

In this work, we answer the above questions from the perspective of non-parametric classification under the non-interactive LDP constraint. Although parametric methods enjoy a better privacy-utility trade-off (Duchi et al., 2018) under certain assumptions, they are vulnerable to model misspecification. In the LDP setting, where we have limited prior knowledge of the data, non-parametric methods provide worst-case performance guarantees. As for private classification, the local setting remains largely unexplored compared to the central setting. A notable reason is that most gradient-based methods (Song et al., 2013; Abadi et al., 2016) are impractical due to their high demand for memory and communication capacity on the terminal machine (Tramèr et al., 2022). We consider non-interactive LDP, where the communication between the curator and the data holders is limited to a single round. This type of method is favored by practitioners (Smith et al., 2017; Zheng et al., 2017; Daniely and Feldman, 2019) since it avoids multi-round protocols that are prohibitively slow in practice due to network latency.

Against this background, we summarize our contributions by answering these questions:

• For the first question, we propose to adopt the framework of posterior drift, a setting in transfer learning (Cai and Wei, 2021), for our analysis. Specifically, given the target distribution $P$ and the source distribution $Q$, we require $P_X = Q_X$, i.e. they have the same marginal distribution. Moreover, their Bayes decision rules are identical, i.e. $(P(Y = 1 \mid X) - 1/2) \cdot (Q(Y = 1 \mid X) - 1/2) \ge 0$. The assumption covers a wide range of distributions and includes $P = Q$ as a special case. Theoretically, we establish, for the first time, a mini-max lower bound for non-parametric LDP learning with public data.

• To answer the second question, we propose the Locally differentially Private Classification Tree (LPCT), a novel algorithm that leverages both public and private data. Specifically, we first create a tree partition on public data and then estimate the regression function through a weighted average of public and private data. In addition to inheriting the merits of tree-based models, such as efficiency, interpretability, and extensibility to multiple data types, LPCT is superior from both theoretical and empirical perspectives. Theoretically, we show that LPCT attains the mini-max optimal convergence rate. Empirically, we conduct experiments on both synthetic and real data sets to show the effectiveness of LPCT over state-of-the-art competitors.

• To answer the last question, we propose the Pruned Locally differentially Private Classification Tree (LPCT-prune), a data-driven classifier that is free of parameter tuning. Specifically, we query the privatized information with a sufficiently large tree depth and then conduct an efficient pruning procedure. Theoretically, we show that LPCT-prune achieves the optimal convergence rate in most cases and maintains a reasonable convergence rate otherwise. Empirically, we illustrate that LPCT-prune with a default parameter performs comparably to LPCT with the best parameters.

This article is organized as follows. In Section 2, we provide a comprehensive literature review. Then we formally define our problem setting and introduce our methodology in Section 3. The theoretical results are presented in Section 4. Then we develop the data-driven estimator in Section 5. Extensive experiments on synthetic and real data are conducted in Sections 6 and 7, respectively. The conclusion and discussion are in Section 8.

2. Related Work

2.1 Private Learning with Public Data

Leveraging public data in private learning is a research topic of both theoretical and practical importance for closing the gap between private and non-private methods. A long list of works addresses private learning with public data from a central differential privacy perspective. Through unlabeled public data, Papernot et al. (2017, 2018) transferred knowledge privately into student models, whose theoretical benefits are established by Liu et al. (2021). Empirical investigations have demonstrated the effectiveness of pretraining on public data and fine-tuning privately on sensitive data (Li et al., 2022; Yu et al., 2022; Ganesh et al., 2023; Yu et al., 2023). Using the information obtained from public data, Wang and Zhou (2020); Kairouz et al. (2021); Yu et al. (2021a); Zhou et al. (2021); Amid et al. (2022); Nasr et al. (2023) investigated preconditioning or adaptively clipping the private gradients, which reduces the required amount of noise in DP-SGD and accelerates its convergence. Theoretical works such as Bassily et al. (2018); Alon et al. (2019); Bassily et al. (2020) studied sample complexity bounds for PAC learning and query release based on the VC dimension of the function space. Bie et al. (2022) used public data to standardize private data and showed that the sample complexity of Gaussian mean estimation can be improved in its dependence on the range parameter. More recently, by relating to sample compression schemes, Ben-David et al. (2023) presented sample complexities for several problems including Gaussian mixture estimation. Ganesh et al. (2023) explained the necessity of public data by investigating the loss landscape.

In contrast, less attention has been paid to the local setting. Su et al. (2023) considered half-space estimation with auxiliary unlabeled public data. They constructed several weak LDP classifiers and labeled the public data by majority vote. They established a sample complexity that is linear in the dimension and polynomial in the other terms for both private and public data. Wang et al. (2023a) employed public data to estimate the leading eigenvalue of the covariance matrix, which serves as a reference for data holders to clip their statistics. Given the learned clipping threshold, the sample complexity for GLM with LDP for a general class of functions is improved to be polynomial in the error, dimension, and privacy budget, which is shown to be impossible without public data (Smith et al., 2017; Dagan and Feldman, 2020). Both Wang et al. (2023a) and Su et al. (2023) considered unlabeled public data, while our work further leverages the information contained in the labels when labeled public data is available. More recently, Ma et al. (2023) enhanced LDP regression by using public data to create an informative partition. However, the relationship between the source and target distributions is only vaguely defined. Also, their theoretical results are no better than those obtained using private data alone. Lowy et al. (2023) studied mean estimation, DP-SCO, and DP-ERM with public data. They assume that the public data and the private data are identically distributed. Also, their theoretical results are established under sequentially-interactive LDP. Despite the differences in the research problems, their conclusions are analogous to ours: the asymptotically optimal error can be achieved by using either the private or the public estimate alone, while a weighted average achieves a better constant as well as better empirical performance.

2.2 Locally Private Classification

LDP classification with parametric assumptions can be viewed as a special case of LDP-ERM (Smith et al., 2017; Zheng et al., 2017; Wang et al., 2018; Daniely and Feldman, 2019; Dagan and Feldman, 2020; Wang et al., 2023a). Most works provide evidence of hardness for non-interactive LDP-ERM. Non-parametric methods possess a worse privacy-utility trade-off (Duchi et al., 2018) and are considered harder than parametric problems. Zheng et al. (2017) investigated kernel ridge regression and established an estimation error bound of order $n^{-1/4}$. Their results only apply to losses that are strongly convex and smooth. The works most related to ours are those of Berrett and Butucea (2019) and Berrett et al. (2021). Berrett and Butucea (2019) studied non-parametric classification under the Hölder smoothness assumption. They proposed a histogram-based method using the Laplace mechanism and showed that this approach is mini-max optimal under their assumption. The strong consistency of this approach is established in Berrett et al. (2021) as a by-product. Our results include their conclusion as a special case and hold in a stronger sense, i.e. “uniformly with high probability” instead of “in expectation”.

2.3 Private Hyperparameter Tuning

Researchers have highlighted the importance and difficulty of parameter tuning under the DP constraint (Papernot and Steinke, 2021; Wang et al., 2023b), focusing on central DP. Chaudhuri and Vinterbo (2013) explored this problem under strong stability assumptions. Liu and Talwar (2019) presented DP algorithms for selecting the best parameters among several candidates. Compared to the original algorithm, their method suffers a factor of 3 in the privacy parameter, meaning that 2/3 of the privacy budget is spent on the selection process. Papernot and Steinke (2021) improved this result with a much tighter privacy bound in Rényi DP by running the algorithm a random number of times and returning the best result. Recently, Wang et al. (2023b) proposed a framework that reduces DP parameter selection to a non-DP counterpart. This approach leverages existing hyperparameter optimization methods and outperforms the uniform sampling approaches (Liu and Talwar, 2019; Papernot and Steinke, 2021). Ramaswamy et al. (2020) proposed to tune hyperparameters on public data sets. Mohapatra et al. (2022) argued that, under a limited privacy budget, DP optimizers that require less tuning are preferable. To the best of our knowledge, research on LDP parameter selection is lacking. Butucea et al. (2020) investigated adaptive parameter selection in non-parametric density estimation and provided a data-driven wavelet estimator.

3. Locally Differentially Private Classification Tree

In this section, we introduce the Locally differentially Private Classification Tree (LPCT). After explaining the necessary notation and problem setting in Section 3.1, we propose our decision tree partition rule in Section 3.2. Finally, Section 3.3 introduces our privacy mechanism and proposes the final algorithm.

3.1 Problem Setting

We introduce the necessary notation. For any vector $x$, let $x^i$ denote the $i$-th element of $x$. Recall that for $1 \le p < \infty$, the $L_p$-norm of $x = (x^1, \ldots, x^d)$ is defined by $\|x\|_p := (|x^1|^p + \cdots + |x^d|^p)^{1/p}$. Let $x^{i:j} = (x^i, \cdots, x^j)$ be the slicing view of $x$ at positions $i, \cdots, j$.

Throughout this paper, we use the notation $a_n \lesssim b_n$ and $a_n \gtrsim b_n$ to denote that there exist positive constants $c$ and $c'$ such that $a_n \le c\, b_n$ and $a_n \ge c'\, b_n$, respectively, for all $n \in \mathbb{N}$. In addition, we write $a_n \asymp b_n$ if $a_n \lesssim b_n$ and $b_n \lesssim a_n$. Let $a \vee b = \max(a, b)$ and $a \wedge b = \min(a, b)$.

Besides, for any set $A \subset \mathbb{R}^d$, the diameter of $A$ is defined by $\mathrm{diam}(A) := \sup_{x, x' \in A} \|x - x'\|_2$.

Let $f_1 \circ f_2$ represent the composition of functions $f_1$ and $f_2$. Let $A \times B$ be the Cartesian product of sets, where $A \subset \mathcal{X}_1$ and $B \subset \mathcal{X}_2$. For a measure $P$ on $\mathcal{X}_1$ and a measure $Q$ on $\mathcal{X}_2$, define the product measure $P \otimes Q$ on $\mathcal{X}_1 \times \mathcal{X}_2$ as $P \otimes Q(A \times B) = P(A) \cdot Q(B)$. For an integer $k$, denote the $k$-fold product measure on $\mathcal{X}_1^k$ as $P^k$. Let the standard Laplace random variable have probability density function $e^{-|x|}/2$ for $x \in \mathbb{R}$.

We consider binary classification; the extension of our results to multi-class classification is straightforward. Suppose there are two unknown probability measures, the private measure $P$ and the public measure $Q$, on $\mathcal{X} \times \mathcal{Y} = [0, 1]^d \times \{0, 1\}$. We observe $n_P$ i.i.d. private samples $D^P = \{(X_1^P, Y_1^P), \ldots, (X_{n_P}^P, Y_{n_P}^P)\}$ drawn from the target distribution $P$, and $n_Q$ i.i.d. public samples $D^Q = \{(X_1^Q, Y_1^Q), \ldots, (X_{n_Q}^Q, Y_{n_Q}^Q)\}$ drawn from the source distribution $Q$. The data points from the distributions $P$ and $Q$ are also mutually independent. Our goal is to classify under the target distribution $P$. Given the observed data, we construct a classifier $\hat f : \mathcal{X} \to \mathcal{Y}$ which minimizes the classification risk under the target distribution $P$:

$$R_P(\hat f) := \mathbb{P}_{(X,Y) \sim P}\big(Y \ne \hat f(X)\big).$$

Here $\mathbb{P}_{(X,Y) \sim P}(\cdot)$ denotes the probability when $(X, Y)$ is drawn from distribution $P$. The Bayes risk, which is the smallest possible risk with respect to $P$, is given by $R_P^* := \inf\{R_P(f) \mid f : \mathcal{X} \to \mathcal{Y} \text{ is measurable}\}$. In such binary classification problems, the regression functions are defined as

$$\eta_P^*(x) := P(Y = 1 \mid X = x) \quad \text{and} \quad \eta_Q^*(x) := Q(Y = 1 \mid X = x), \tag{1}$$

which represent the conditional distributions $P_{Y|X}$ and $Q_{Y|X}$. The function that achieves the Bayes risk with respect to $P$ is called the Bayes function, namely, $f_P^*(x) := \mathbf{1}(\eta_P^*(x) > 1/2)$.

We consider the following setting. The estimator $\hat f$ is regarded as a random function of both $D^P$ and $D^Q$, while its construction process with respect to $D^P$ must be locally differentially private (LDP). LDP is formally defined as follows.

Definition 1 (Local Differential Privacy) Given data $\{(X_i^P, Y_i^P)\}_{i=1}^{n_P}$, privatized information $Z_i$, which is a random variable on $\mathcal{S}$, is released based on $(X_i^P, Y_i^P)$ and $Z_1, \cdots, Z_{i-1}$. Let $\sigma(\mathcal{S})$ be the σ-field on $\mathcal{S}$. $Z_i$ is drawn conditional on $(X_i^P, Y_i^P)$ and $Z_{1:i-1}$ via the distribution $R_i\big(S \mid X_i^P = x, Y_i^P = y, Z_{1:i-1} = z_{1:i-1}\big)$ for $S \in \sigma(\mathcal{S})$. Then the mechanism $R = \{R_i\}_{i=1}^{n_P}$ is sequentially-interactive ε-locally differentially private (ε-LDP) if

$$\frac{R_i\big(S \mid X_i^P = x, Y_i^P = y, Z_{1:i-1} = z_{1:i-1}\big)}{R_i\big(S \mid X_i^P = x', Y_i^P = y', Z_{1:i-1} = z_{1:i-1}\big)} \le e^{\varepsilon}$$

for all $1 \le i \le n_P$, $S \in \sigma(\mathcal{S})$, $x, x' \in \mathcal{X}$, $y, y' \in \mathcal{Y}$, and $z_{1:i-1} \in \mathcal{S}^{i-1}$. Moreover, if

$$\frac{R_i\big(S \mid X_i^P = x, Y_i^P = y\big)}{R_i\big(S \mid X_i^P = x', Y_i^P = y'\big)} \le e^{\varepsilon}$$

for all $1 \le i \le n_P$, $S \in \sigma(\mathcal{S})$, $x, x' \in \mathcal{X}$, $y, y' \in \mathcal{Y}$, then $R$ is non-interactive ε-LDP.
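To make the ratio condition in Definition 1 concrete, here is a minimal sketch (not taken from the paper) for a mechanism with finitely many inputs and outputs, where bounding singleton output sets is enough. Binary randomized response, which this section later mentions as an alternative to the Laplace mechanism, stands in for a generic mechanism acting on an encoded $(x, y)$; the helper names `rr_matrix` and `worst_case_ratio` are hypothetical.

```python
import math

def rr_matrix(eps):
    """Binary randomized response: release the true bit w.p. e^eps / (1 + e^eps)."""
    keep = math.exp(eps) / (1.0 + math.exp(eps))
    # Row = true (encoded) input, column = released output.
    return [[keep, 1.0 - keep],
            [1.0 - keep, keep]]

def worst_case_ratio(mech):
    """Max of R(s | x) / R(s | x') over inputs x, x' and single outputs s.

    With finitely many outputs, bounding singletons bounds every set S,
    because R(S | x) is a sum of singleton probabilities.
    """
    n_in, n_out = len(mech), len(mech[0])
    return max(mech[x][s] / mech[xp][s]
               for s in range(n_out)
               for x in range(n_in)
               for xp in range(n_in))

eps = 1.0
print(worst_case_ratio(rr_matrix(eps)), math.exp(eps))  # equal up to rounding
```

Because the same matrix is applied to every holder and does not depend on earlier releases $Z_{1:i-1}$, this toy mechanism falls under the non-interactive case of the definition.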

This formulation is widely adopted (Duchi et al., 2018; Berrett and Butucea, 2019). In contrast to central DP, where the likelihood ratio is taken with respect to some statistic of all the data, LDP requires individuals to guarantee their own privacy by considering the likelihood ratio of each $(X_i^P, Y_i^P)$. Once the view $z$ is provided, no further processing can reduce the deniability about taking a value $(x, y)$, since any outcome $z$ is nearly as likely to have come from some other initial value $(x', y')$. Sequentially-interactive mechanisms fulfill the requirements of a wide range of methods, including gradient-based approaches. However, they can be prohibitively slow in practice. Conversely, non-interactive mechanisms are quite efficient but suffer from poorer performance (Smith et al., 2017). Our analysis does not encompass the more general fully-interactive mechanisms due to their complex nature (Duchi et al., 2018).

In addition to the privatized P data, we consider additional public data from distribution $Q$. To describe the relationship between $P$ and $Q$, we consider the posterior drift setting from the transfer learning literature (Cai and Wei, 2021). Under the posterior drift model, the marginal distributions are identical, $P_X = Q_X$. The main difference between $P$ and $Q$ lies in the regression functions $\eta_P^*(x)$ and $\eta_Q^*(x)$. Specifically, let $\eta_Q^*(x) = \phi(\eta_P^*(x))$ for some strictly increasing link function $\phi(\cdot)$ with $\phi(1/2) = 1/2$. The condition that $\phi$ is strictly increasing means that those $X$ that are more likely to be labeled $Y = 1$ under $P$ are also more likely to be labeled $Y = 1$ under $Q$. The assumption $\phi(1/2) = 1/2$ guarantees that those $X$ that are non-informative under $P$ are also non-informative under $Q$ and, more importantly, that the signs of $\eta_P^* - 1/2$ and $\eta_Q^* - 1/2$ are identical.
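The posterior drift model can be simulated in a few lines. The sketch below is illustrative only: the target regression function `eta_p`, the affine link $\phi(t) = 1/2 + \kappa (t - 1/2)$ with $\kappa = 0.5$, and the uniform marginal are our own assumptions, not choices made in the paper. It checks that such a link preserves the sign of $\eta - 1/2$, so the Bayes classifiers under $P$ and $Q$ coincide even though the signal under $Q$ is weaker.

```python
import numpy as np

def eta_p(x):
    """Hypothetical target regression function on [0, 1]^d, depending on x^1 only."""
    return np.clip(0.5 + 0.8 * (x[:, 0] - 0.5), 0.0, 1.0)

def phi(t, kappa=0.5):
    """Hypothetical link: strictly increasing, phi(1/2) = 1/2, shrinks the signal."""
    return 0.5 + kappa * (t - 0.5)

rng = np.random.default_rng(0)
x = rng.uniform(size=(1000, 2))   # shared marginal P_X = Q_X (uniform, assumed)
ep = eta_p(x)                     # eta_P^*(x)
eq = phi(ep)                      # eta_Q^*(x) = phi(eta_P^*(x))

# Signs of eta - 1/2 agree, so 1(eta_P^* > 1/2) and 1(eta_Q^* > 1/2) coincide.
assert np.all((ep - 0.5) * (eq - 0.5) >= 0)
assert np.array_equal(ep > 0.5, eq > 0.5)
```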

3.2 Decision Tree Partition

In this section, we formalize the decision tree partition process. A binary tree partition $\pi$ is a disjoint cover of $\mathcal{X}$ obtained by recursively splitting cells into sub-cells. While our methodology applies to any tree partition, general partitions such as the original CART (Breiman, 1984) can be challenging to analyze theoretically. Following Cai et al. (2023) and Ma et al. (2023), we propose a new splitting rule called the max-edge partition rule. This rule is amenable to theoretical analysis and can also achieve satisfactory practical performance. In the non-interactive setting, the private data set is unavailable during the partition process, so the partition is created solely on the public data. Given the public data set $\{(X_i^Q, Y_i^Q)\}_{i=1}^{n_Q}$, the partition rule is stated as follows:

• Let $A_1^{(0)} := [0, 1]^d$ be the initial rectangular cell and $\pi_0 := \{A_j^{(0)}\}_{j \in I_0}$ be the initial cell partition, where $I_0 = \{1\}$ is the initial index set. In addition, let $p \in \mathbb{N}$ represent the maximum depth of the tree. This parameter is fixed beforehand by the user and may depend on $n$.

• Suppose we have obtained a partition $\pi_{i-1}$ of $\mathcal{X}$ after $i - 1$ steps of the recursion. Let $\pi_i = \emptyset$. In the $i$-th step, let $A_j^{(i-1)} \in \pi_{i-1}$ be $\times_{\ell=1}^{d} [a_\ell, b_\ell]$ for $j \in I_{i-1}$. We choose the edge to be split among the longest edges. The index set of longest edges is defined as

$$M_j^{(i-1)} = \Big\{ k \;\Big|\; |b_k - a_k| = \max_{\ell = 1, \cdots, d} |b_\ell - a_\ell|, \ k = 1, \cdots, d \Big\}.$$

• Assume we split along the $\ell$-th dimension for $\ell \in M_j^{(i-1)}$. Then $A_j^{(i-1)}$ is partitioned into a left sub-cell $A_{j,0}^{(i-1)}(\ell)$ and a right sub-cell $A_{j,1}^{(i-1)}(\ell)$ along the midpoint of the chosen dimension, where $A_{j,0}^{(i-1)}(\ell) = \{x \mid x \in A_j^{(i-1)}, \ x^\ell < (a_\ell + b_\ell)/2\}$ and $A_{j,1}^{(i-1)}(\ell) = A_j^{(i-1)} \setminus A_{j,0}^{(i-1)}(\ell)$. Then the dimension to be split is chosen by

$$\min \, \operatorname*{arg\,min}_{\ell \in M_j^{(i-1)}} \ \operatorname{score}\Big(A_{j,0}^{(i-1)}(\ell), A_{j,1}^{(i-1)}(\ell), D^Q\Big), \tag{2}$$

where the score(·) function can be any criterion for decision tree classifiers, such as the Gini index or information gain. The min operator implies that if the arg min returns multiple indices, we select the smallest one. Thus, the partition process is feasible even if there is no public data, in which case all axes have the same score.

• Once $\ell$ is selected, let $\pi_i = \pi_i \cup \{A_{j,0}^{(i-1)}(\ell), A_{j,1}^{(i-1)}(\ell)\}$.

Algorithm 1: Max-edge Partition
Input: Public data $D^Q$, depth $p$.
Initialization: $\pi_0 = \{[0, 1]^d\}$.
for $i = 1$ to $p$ do
    $\pi_i = \emptyset$
    for $j$ in $I_{i-1}$ do
        Select $\ell$ as in (2).
        $\pi_i = \pi_i \cup \{A_{j,0}^{(i-1)}(\ell), A_{j,1}^{(i-1)}(\ell)\}$.
    end
end
Output: Partition $\pi_p$
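The rule above and Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under our own assumptions: the score is a size-weighted Gini impurity (one of the criteria the text allows), each cell tracks the indices of the public points it contains, and, as in Algorithm 1, every cell is split until depth $p$. The function names are hypothetical.

```python
import numpy as np

def gini_score(y_left, y_right):
    """Size-weighted Gini impurity of a candidate split (lower is better)."""
    score = 0.0
    for y in (y_left, y_right):
        if len(y) > 0:
            p = y.mean()
            score += len(y) * 2.0 * p * (1.0 - p)
    return score

def max_edge_partition(X, y, depth):
    """Split every cell of [0, 1]^d at the midpoint of a longest edge, `depth` times.

    Returns a list of (lower, upper) corner pairs describing the leaves of pi_p.
    """
    d = X.shape[1]
    # Each cell carries the indices of the public points it contains.
    cells = [(np.zeros(d), np.ones(d), np.arange(len(X)))]
    for _ in range(depth):
        new_cells = []
        for lo, hi, idx in cells:
            edges = hi - lo
            longest = np.flatnonzero(np.isclose(edges, edges.max()))  # M_j
            best, best_score = None, np.inf
            for ell in longest:  # ascending; strict "<" keeps the smallest index on ties
                mid = (lo[ell] + hi[ell]) / 2.0
                left = X[idx, ell] < mid
                s = gini_score(y[idx][left], y[idx][~left])
                if s < best_score:
                    best, best_score = ell, s
            mid = (lo[best] + hi[best]) / 2.0
            left = X[idx, best] < mid
            left_hi, right_lo = hi.copy(), lo.copy()
            left_hi[best] = mid
            right_lo[best] = mid
            new_cells.append((lo, left_hi, idx[left]))     # A_{j,0}: x^ell < midpoint
            new_cells.append((right_lo, hi, idx[~left]))   # A_{j,1}: the rest
        cells = new_cells
    return [(lo, hi) for lo, hi, _ in cells]

rng = np.random.default_rng(0)
X_pub = rng.uniform(size=(200, 2))
y_pub = (X_pub[:, 0] > 0.5).astype(int)
cells = max_edge_partition(X_pub, y_pub, depth=3)
print(len(cells))  # 2**3 = 8 leaves
```

With no public points (`X` of shape `(0, d)`), every candidate scores 0, so the tie-breaking falls back to the smallest axis, mirroring the remark after (2).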

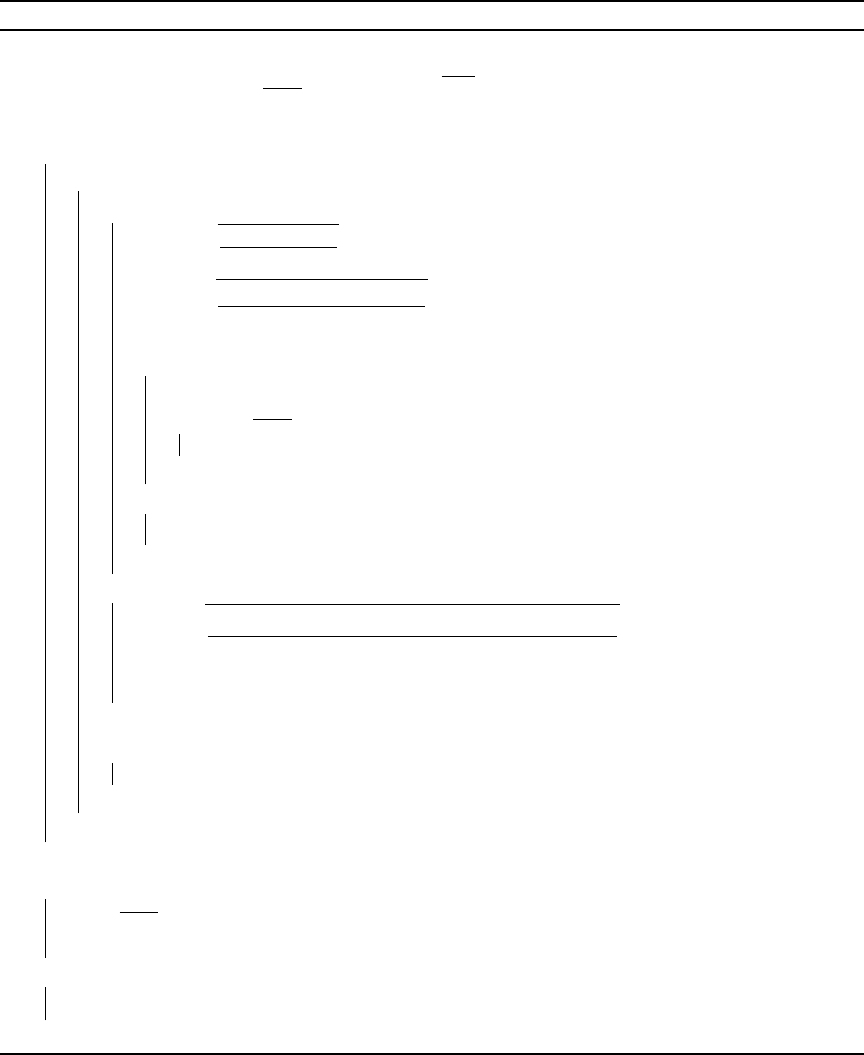

The complete process is presented in Algorithm 1 and illustrated in Figure 1. For each cell, the partition rule selects the midpoint of the longest edges that achieves the largest score reduction. This procedure continues until either a node has no samples or the depth of the tree reaches its limit. We also define relationships between nodes, which are needed in Section 5. For a node $A_j^{(i)}$, we define its parent node as

$$\operatorname{parent}\big(A_j^{(i)}\big) := A_{j'}^{(i-1)} \quad \text{s.t.} \quad A_{j'}^{(i-1)} \in \pi_{i-1}, \ A_j^{(i)} \subset A_{j'}^{(i-1)}.$$

For $i' < i$, the ancestor of a node $A_j^{(i)}$ with depth $i'$ is then defined as

$$\operatorname{ancestor}\big(A_j^{(i)}, i'\big) = \operatorname{parent}^{\,i-i'}\big(A_j^{(i)}\big) = \underbrace{\operatorname{parent} \circ \cdots \circ \operatorname{parent}}_{i-i'}\big(A_j^{(i)}\big),$$

which is the cell in $\pi_{i'}$ that contains $A_j^{(i)}$.
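The parent and ancestor maps are easy to compute if each node is encoded by its path from the root. In the sketch below (a hypothetical encoding, not the paper's index $j$), a depth-$i$ node is a tuple of $i$ bits, where 0 selects the left sub-cell $A_{j,0}$ and 1 the right sub-cell $A_{j,1}$; `ancestor` literally applies `parent` $i - i'$ times.

```python
from typing import Tuple

Node = Tuple[int, ...]  # hypothetical encoding: 0 = left sub-cell, 1 = right sub-cell

def parent(node: Node) -> Node:
    """The depth-(i-1) cell containing a depth-i cell: drop the last split."""
    if len(node) == 0:
        raise ValueError("the root [0, 1]^d has no parent")
    return node[:-1]

def ancestor(node: Node, depth: int) -> Node:
    """parent applied (i - depth) times, for 0 <= depth < i."""
    if not 0 <= depth < len(node):
        raise ValueError("need 0 <= i' < i")
    for _ in range(len(node) - depth):
        node = parent(node)
    return node

leaf = (1, 0, 1)          # a depth-3 cell
print(parent(leaf))       # (1, 0)
print(ancestor(leaf, 1))  # (1,)
```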

3.3 Privacy Mechanism for Any Partition

This section focuses on the privacy mechanism based on general tree partitions. We first introduce the necessary definitions and then present our private estimator based on the Laplace mechanism in (8). For clarity, a summary of the defined estimators is given in Appendix A.1.

Figure 1 (panels $\pi_0$, $\pi_1$, $\pi_2$, $\pi_3$): Partition created by the max-edge rule. The areas filled with orange represent the corresponding $A_j^{(i)}$, and the blue lines represent the partition boundaries after each level of partitioning. Red boxes contain an ancestor with depth 2, which is also a parent. Blue boxes contain an ancestor with depth 1.

Let $\pi = \pi_p = \{A_j^{(p)}\}_{j \in I_p}$, with index set $I_p$, be the tree partition created by Algorithm 1. A population classification tree estimate is defined as

$$\eta_P^\pi(x) = \sum_{j \in I_p} \mathbf{1}\{x \in A_j^{(p)}\} \, \frac{\int_{A_j^{(p)}} \eta_P^*(x') \, dP_X(x')}{\int_{A_j^{(p)}} dP_X(x')}. \tag{3}$$

The label is inferred using the population tree classifier, defined as $f_P^\pi(x) = \mathbf{1}(\eta_P^\pi(x) > 1/2)$. Here, we let $0/0 = 0$ by definition.

To obtain an empirical estimator from the data set $D = \{(X_1^P, Y_1^P), \ldots, (X_{n_P}^P, Y_{n_P}^P)\}$, we estimate the numerator and the denominator of (3) separately. To estimate the denominator, each sample $(X_i^P, Y_i^P)$ contributes a one-hot vector $U_i^P \in \{0, 1\}^{|I_p|}$ whose $j$-th element is $\mathbf{1}\{X_i^P \in A_j^{(p)}\}$. Then an estimate of $\int_{A_j^{(p)}} dP_X(x')$ is $\frac{1}{n_P} \sum_{i=1}^{n_P} U_i^{Pj}$, which is the number of samples in $A_j^{(p)}$ divided by $n_P$. Analogously, let $V_i^P = Y_i^P \cdot U_i^P \in \{0, 1\}^{|I_p|}$. An estimate of $\int_{A_j^{(p)}} \eta_P^*(x') \, dP_X(x')$ is $\frac{1}{n_P} \sum_{i=1}^{n_P} V_i^{Pj}$, which is the sum of the labels in $A_j^{(p)}$ divided by $n_P$. Combining the pieces, a classification tree estimate is defined as

$$\hat\eta_P^\pi(x) = \sum_{j \in I_p} \mathbf{1}\{x \in A_j^{(p)}\} \, \frac{\sum_{i=1}^{n_P} V_i^{Pj}}{\sum_{i=1}^{n_P} U_i^{Pj}}. \tag{4}$$

The corresponding classifier is defined as $\hat f_P^\pi(x) = \mathbf{1}(\hat\eta_P^\pi(x) > 1/2)$. In other words, $\hat\eta_P^\pi(x)$ estimates $\eta_P^*(x)$ by the average of the responses in the cell containing $x$. In the non-private setting, each data holder prepares $U_i^P$ and $V_i^P$ according to the partition $\pi$ and sends them to the curator. Then the curator aggregates the transmissions following (4).
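A minimal NumPy sketch of the non-private estimator (4), assuming the partition is already fixed and each sample's cell index $j$ is known (how cells are looked up is left abstract). The factors $1/n_P$ cancel in the ratio, so only per-cell sums are needed, and empty cells return 0 following the convention $0/0 = 0$. The function names and toy data are hypothetical.

```python
import numpy as np

def one_hot(cell_ids, n_cells):
    """U_i: the j-th entry is 1{X_i in A_j}."""
    U = np.zeros((len(cell_ids), n_cells))
    U[np.arange(len(cell_ids)), cell_ids] = 1.0
    return U

def tree_estimate(cell_ids, y, n_cells):
    """Estimate (4): per-cell average of labels, with 0/0 := 0."""
    U = one_hot(cell_ids, n_cells)
    V = y[:, None] * U                      # V_i = Y_i * U_i
    num, den = V.sum(axis=0), U.sum(axis=0)
    return np.divide(num, den, out=np.zeros(n_cells), where=den != 0)

cell_ids = np.array([0, 0, 1, 1, 1, 3])   # cell index of each X_i (cell 2 is empty)
y = np.array([1, 0, 1, 1, 0, 1])
eta_hat = tree_estimate(cell_ids, y, n_cells=4)
print(eta_hat)                       # [0.5, 0.667, 0.0, 1.0] (rounded)
print((eta_hat > 0.5).astype(int))   # classifier 1(eta_hat > 1/2) per cell
```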

To protect the privacy of each data holder, we propose to estimate the numerator and denominator of the population tree estimate (3) using a privatized method. Specifically, $U_i^P$ and $V_i^P$ are perturbed with the Laplace mechanism (Dwork et al., 2006) before being sent to the curator. For i.i.d. standard Laplace random variables $\zeta_i^j$ and $\xi_i^j$, let

$$\tilde U_i^P := U_i^P + \frac{4}{\varepsilon} \zeta_i = U_i^P + \Big(\frac{4}{\varepsilon} \zeta_i^1, \cdots, \frac{4}{\varepsilon} \zeta_i^{|I_p|}\Big). \tag{5}$$

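Then a privatized estimate of $\int_{A_j^{(p)}} dP_X(x)$ is $\frac{1}{n_P} \sum_{i=1}^{n_P} \tilde U_i^{Pj}$. Analogously, let

$$\tilde V_i^P := V_i^P + \frac{4}{\varepsilon} \xi_i = V_i^P + \Big(\frac{4}{\varepsilon} \xi_i^1, \cdots, \frac{4}{\varepsilon} \xi_i^{|I_p|}\Big). \tag{6}$$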

An estimate of $\int_{A_j^{(p)}} \eta_P^*(x) \, dP_X(x)$ is $\frac{1}{n_P} \sum_{i=1}^{n_P} \tilde V_i^{Pj}$. Then, using the privatized information $(\tilde U_i^P, \tilde V_i^P)$, $i = 1, \cdots, n_P$, we can estimate the regression function as

$$\tilde\eta_P^\pi(x) = \sum_{j \in I_p} \mathbf{1}\{x \in A_j^{(p)}\} \, \frac{\sum_{i=1}^{n_P} \tilde V_i^{Pj}}{\sum_{i=1}^{n_P} \tilde U_i^{Pj}}. \tag{7}$$
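The privatization in (5) and (6) and the curator-side estimate (7) can be simulated as follows. This is a sketch under stated assumptions: all holders are processed in one vectorized call for convenience, whereas in deployment each holder would add noise to their own $(U_i^P, V_i^P)$ locally; the cell assignments, the true cell-wise probabilities, and ε = 2 are made-up illustration values, and the function names are hypothetical.

```python
import numpy as np

def privatize(cell_ids, y, n_cells, eps, rng):
    """Add i.i.d. Laplace noise of scale 4/eps to every entry of U_i and V_i, as in (5)-(6)."""
    n = len(cell_ids)
    U = np.zeros((n, n_cells))
    U[np.arange(n), cell_ids] = 1.0
    V = y[:, None] * U
    scale = 4.0 / eps
    U_tilde = U + rng.laplace(0.0, scale, size=U.shape)   # zeta noise
    V_tilde = V + rng.laplace(0.0, scale, size=V.shape)   # independent xi noise
    return U_tilde, V_tilde

def private_tree_estimate(U_tilde, V_tilde):
    """Curator side of (7): ratio of noisy per-cell sums (0/0 := 0)."""
    num, den = V_tilde.sum(axis=0), U_tilde.sum(axis=0)
    return np.divide(num, den, out=np.zeros_like(num), where=den != 0)

rng = np.random.default_rng(1)
n, n_cells = 200_000, 4
cell_ids = rng.integers(0, n_cells, size=n)
true_eta = np.array([0.2, 0.4, 0.6, 0.8])
y = (rng.uniform(size=n) < true_eta[cell_ids]).astype(float)
U_t, V_t = privatize(cell_ids, y, n_cells, eps=2.0, rng=rng)
eta_tilde = private_tree_estimate(U_t, V_t)
print(np.round(eta_tilde, 2))
```

With this many holders the noisy ratios typically land close to the true cell probabilities; with far fewer holders the per-coordinate noise of scale 4/ε dominates, which reflects the sample-size cost of LDP discussed in Section 1.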

The same procedure is also derived by Berrett and Butucea (2019). As an alternative, one can use randomized response (Warner, 1965), since both $U$ and $V$ are binary vectors. The following proposition shows that the estimation procedure satisfies LDP.

Proposition 2 Let $\pi = \{A_j\}_{j \in I}$ be any partition of $\mathcal{X}$ with $\cup_{j \in I} A_j = \mathcal{X}$ and $A_i \cap A_j = \emptyset$ for $i \ne j$. Then the privacy mechanism defined in (5) and (6) is non-interactive ε-LDP.
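A brief sketch of why the scale 4/ε suffices (our own outline, not the proof given in the appendix): writing $b = 4/\varepsilon$, the density of $\tilde U_i^P$ given $U_i^P = u$ is $\prod_j (2b)^{-1} \exp(-|\tilde u^j - u^j| / b)$, so for any two inputs $u, u'$ and by the triangle inequality

```latex
\frac{p(\tilde u \mid u)}{p(\tilde u \mid u')}
  = \exp\Big(\frac{\varepsilon}{4}\big(\|\tilde u - u'\|_1 - \|\tilde u - u\|_1\big)\Big)
  \le \exp\Big(\frac{\varepsilon}{4}\|u - u'\|_1\Big)
  \le e^{\varepsilon/2},
  \qquad \|u - u'\|_1 \le 2 \text{ for one-hot } u, u'.
```

The same bound holds for $\tilde V_i^P$, because $V_i^P$ is either a one-hot vector or the zero vector, so $\|v - v'\|_1 \le 2$. Since $\zeta_i$ and $\xi_i$ are independent, the joint density ratio of $(\tilde U_i^P, \tilde V_i^P)$ is at most $e^{\varepsilon/2} \cdot e^{\varepsilon/2} = e^{\varepsilon}$, and integrating over any $S \in \sigma(\mathcal{S})$ preserves the bound. Because $\pi$ is built from public data only, each release depends on nothing but the holder's own $(X_i^P, Y_i^P)$, which is the non-interactive case of Definition 1.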

When one also has access to Q data in addition to P data, the Q data can help the classification task under the target distribution $P$ and should be taken into consideration. The use of P data must satisfy the local DP constraint, while the Q data can be used without restriction. We combine the private estimator (7) and the non-private estimator (4) by taking a weighted average of the two. Denote the encoded information from $D^P$ and $D^Q$ as $(U_i^P, V_i^P)$ and $(U_i^Q, V_i^Q)$, respectively. We use the same partition for the P estimator and the Q estimator; namely, (4) and (7) are constructed via an identical $\pi$. When taking the average, data from the two distributions should have different weights, since the signal strengths of $P$ and $Q$ differ. To make the classification at $x \in \mathcal{X}$, the Locally differentially Private Classification Tree estimator (LPCT) is defined as follows:

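$$\tilde\eta_{P,Q}^\pi(x) = \sum_{j \in I_p} \mathbf{1}\{x \in A_j^{(p)}\} \, \frac{\sum_{i=1}^{n_P} \tilde V_i^{Pj} + \lambda \sum_{i=1}^{n_Q} V_i^{Qj}}{\sum_{i=1}^{n_P} \tilde U_i^{Pj} + \lambda \sum_{i=1}^{n_Q} U_i^{Qj}}. \tag{8}$$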

Here, we let $\lambda \ge 0$. The locally private tree classifier is $\tilde f_{P,Q}^\pi(x) = \mathbf{1}(\tilde\eta_{P,Q}^\pi(x) > 1/2)$. In each leaf node $A_j^{(p)}$, the classifier assigns a label based on the weighted average of the estimates from P and Q data. The parameter $\lambda$ balances the relative contributions of $P$ and $Q$. A larger value of $\lambda$ means that the final predictions are predominantly influenced by the public data, and vice versa.
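The weighted combination (8) only changes the per-cell sums. The sketch below uses a hypothetical function name, and the noisy private arrays are made-up numbers standing in for the output of (5) and (6). It shows that $\lambda = 0$ recovers the private-only estimate (7), while a very large $\lambda$ approaches the public-only estimate; passing un-noised $U_i^P, V_i^P$ instead would give the non-private estimator (9) defined next. How to choose $\lambda$ is not addressed here.

```python
import numpy as np

def lpct_estimate(U_tilde, V_tilde, U_q, V_q, lam):
    """Per-cell LPCT estimate (8): (sum V~ + lam * sum V^Q) / (sum U~ + lam * sum U^Q)."""
    num = V_tilde.sum(axis=0) + lam * V_q.sum(axis=0)
    den = U_tilde.sum(axis=0) + lam * U_q.sum(axis=0)
    return np.divide(num, den, out=np.zeros_like(num), where=den != 0)

# Made-up noisy private releases: 2 holders, 2 cells.
U_tilde = np.array([[1.3, -0.2], [0.4, 0.9]])
V_tilde = np.array([[0.8, 0.1], [-0.3, 0.7]])
# Public encodings: two points in cell 0 (both labeled 1), one in cell 1 (labeled 0).
U_q = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
V_q = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 0.0]])

for lam in (0.0, 1.0, 1e9):
    est = lpct_estimate(U_tilde, V_tilde, U_q, V_q, lam)
    print(lam, np.round(est, 3), (est > 0.5).astype(int))
```

Note that with noisy private sums a cell estimate can fall outside $[0, 1]$ (cell 1 at $\lambda = 0$ above); only its comparison with 1/2 matters for the classifier.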

The estimator defined in (8) easily adapts to the non-private transfer learning problem in Cai and Wei (2021) if we define

$$\hat\eta_{P,Q}^\pi(x) = \sum_{j \in I_p} \mathbf{1}\{x \in A_j^{(p)}\} \, \frac{\sum_{i=1}^{n_P} V_i^{Pj} + \lambda \sum_{i=1}^{n_Q} V_i^{Qj}}{\sum_{i=1}^{n_P} U_i^{Pj} + \lambda \sum_{i=1}^{n_Q} U_i^{Qj}}. \tag{9}$$

Similar to the nearest neighbor estimator in Cai and Wei (2021), (9) estimates the regression function by taking weighted averages of labels from nearby samples. Our theoretical results in Section 4.2 imply that (9) is also rate-optimal for non-private transfer learning. A key advantage of (9) is that, given the partition, it stores only the prediction values at each node, which makes it convenient to inject noise. In contrast, the nearest neighbor estimator (and similarly the kernel estimator) requires a query to the entire training data set for every test sample, which prevents a straightforward modification into a private estimator. See Kroll (2021) for an example of such limitations.

4. Theoretical Results

In this section, we present our theoretical results. We give the matching lower and upper bounds of the excess risk in Sections 4.1 and 4.2, respectively. Finally, we show in Section 4.3 that LPCT is free from the unknown range parameter. All technical proofs can be found in Appendix B.

4.1 Mini-max Convergence Rate

We first give the necessary assumptions on the distributions P and Q. Then we provide the mini-max rate under these assumptions.

Assumption 1 Let $\alpha \in (0, 1]$. Assume the regression function $\eta^*_P : \mathcal{X} \to \mathbb{R}$ is $\alpha$-Hölder continuous, i.e. there exists a constant $c_L > 0$ such that for all $x_1, x_2 \in \mathcal{X}$, $|\eta^*_P(x_1) - \eta^*_P(x_2)| \le c_L\|x_1 - x_2\|^{\alpha}$. Also, assume that the marginal density functions of P and Q are upper and lower bounded, i.e. $\underline{c} \le dP_X(x) \le \overline{c}$ for some $\underline{c}, \overline{c} > 0$.

Assumption 2 (Margin Assumption) Let $\beta \ge 0$, $C_\beta > 0$. Assume

$$P_X\left(\left|\eta^*_P(X) - \frac12\right| < t\right) \le C_\beta t^{\beta}.$$

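Assumption 3 (Relative Signal Exponent) Let the relative signal exponent $\gamma \in (0, \infty)$ and $C_\gamma \in (0, \infty)$. Assume that

$$\left(\eta^*_Q(x) - \frac12\right)\left(\eta^*_P(x) - \frac12\right) \ge 0, \quad\text{and}\quad \left|\eta^*_Q(x) - \frac12\right| \ge C_\gamma\left|\eta^*_P(x) - \frac12\right|^{\gamma}.$$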

Assumptions 1 and 2 are standard conditions that have been widely used for non-parametric classification problems (Audibert and Tsybakov, 2007; Samworth, 2012; Chaudhuri and Dasgupta, 2014). Assumption 3 characterizes the similarity between the conditional distributions of the public data and the private data. When $\gamma$ is small, the signal strength of Q is strong compared to P. In the extreme case $\gamma = 0$, $\eta^*_Q(x)$ stays bounded away from 1/2 by a constant. In contrast, when $\gamma$ is large, Q contains little information. The special case $\gamma = \infty$ allows $\eta^*_Q(x)$ to always equal 1/2, making Q non-informative. The following theorem specifies the mini-max lower bound with LDP constraints on the P data under the above assumptions. The proof first constructs two function classes and then applies the information inequalities for local privacy (Duchi et al., 2018) and Assouad's Lemma (Tsybakov, 2009).


Ma and Yang

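Theorem 3 Denote the function class of $(P, Q)$ satisfying Assumptions 1, 2, and 3 by $\mathcal{F}$. Then for any estimator $\hat f$ that is sequentially-interactive $\varepsilon$-LDP, there holds

$$\inf_{\hat f}\sup_{\mathcal{F}}\mathbb{E}_{X,Y}\left[R_P(\hat f) - R_P^*\right] \gtrsim \left(n_P(e^{\varepsilon}-1)^2 + n_Q^{\frac{2\alpha+2d}{2\gamma\alpha+d}}\right)^{-\frac{\alpha(\beta+1)}{2\alpha+2d}}. \tag{10}$$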

If $n_Q = 0$, our results reduce to the special case of LDP non-parametric classification with only private data. In this case, the mini-max lower bound is of the order $\left(n_P(e^{\varepsilon}-1)^2\right)^{-\frac{\alpha(\beta+1)}{2\alpha+2d}}$. Previously, Berrett and Butucea (2019) provided an estimator that attains the mini-max optimal convergence rate for small $\varepsilon$. If $n_P = 0$, the result recovers the learning rate $n_Q^{-\frac{\alpha(\beta+1)}{2\gamma\alpha+d}}$ established for transfer learning under posterior drift (Cai and Wei, 2021). In this case, we have only source data from Q and want the classifier to generalize to the target distribution P.

The mini-max convergence rate (10) is the same as if one fitted an estimator using only P data with sample size $n_P + (e^{\varepsilon}-1)^{-2}\cdot n_Q^{\frac{2\alpha+2d}{2\gamma\alpha+d}}$. The contribution of Q data is substantial when $n_P \lesssim (e^{\varepsilon}-1)^{-2}\cdot n_Q^{\frac{2\alpha+2d}{2\gamma\alpha+d}}$. Otherwise, the convergence rate is not significantly better than using P data only. The quantity $(e^{\varepsilon}-1)^{-2}\cdot n_Q^{\frac{2\alpha+2d}{2\gamma\alpha+d}}$, which we refer to as the effective sample size, represents the theoretical gain of using Q data with sample size $n_Q$ under given $\varepsilon$, $\alpha$, $d$, and $\gamma$. Notably, the smaller $\gamma$ is, the more effective the Q data becomes and the greater the gain from using it. By varying $\gamma$, our work covers both settings of previous work: one where a small number of effective public samples is required (Bie et al., 2022; Ben-David et al., 2023), and one where a large number of noisy or irrelevant public samples is required (Ganesh et al., 2023; Yu et al., 2023). Also, the public data is more effective for small $\varepsilon$, i.e. under stronger privacy constraints. Intuitively, under stringent privacy constraints, using Q data is as effective as incorporating a substantial amount of P data. Furthermore, even for a constant $\varepsilon$, our effective sample size significantly exceeds $n_Q^{\frac{2\alpha+d}{2\gamma\alpha+d}}$, its counterpart in the non-private scenario (Cai and Wei, 2021). This suggests that, compared to transfer learning in the non-private setting, public data is more valuable in the private setting.
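The effective sample size and the lower-bound rate in (10) are easy to evaluate numerically. The sketch below drops all constants (an assumption of the illustration, since (10) holds only up to constants), and the function names are hypothetical.

```python
import math

def effective_sample_size(n_Q, eps, alpha, d, gamma):
    """(e^eps - 1)^(-2) * n_Q^((2*alpha + 2*d) / (2*gamma*alpha + d))."""
    return math.expm1(eps) ** -2 * n_Q ** ((2 * alpha + 2 * d) / (2 * gamma * alpha + d))

def nonprivate_counterpart(n_Q, alpha, d, gamma):
    """n_Q^((2*alpha + d) / (2*gamma*alpha + d)), the non-private analogue (Cai and Wei, 2021)."""
    return n_Q ** ((2 * alpha + d) / (2 * gamma * alpha + d))

def lower_bound_rate(n_P, n_Q, eps, alpha, beta, d, gamma):
    """Right-hand side of (10) with constants dropped."""
    total = n_P * math.expm1(eps) ** 2 + n_Q ** ((2 * alpha + 2 * d) / (2 * gamma * alpha + d))
    return total ** (-alpha * (beta + 1) / (2 * alpha + 2 * d))

def p_only_rate(n, eps, alpha, beta, d):
    """Rate (10) with n_Q = 0 and n private samples."""
    return (n * math.expm1(eps) ** 2) ** (-alpha * (beta + 1) / (2 * alpha + 2 * d))

# Hypothetical setting: alpha = 1, d = 2, gamma = 1, n_Q = 100, e^eps - 1 = 1.
n_eff = effective_sample_size(100, math.log(2), alpha=1, d=2, gamma=1)
```

Here the effective sample size is $100^{3/2} = 1000$, versus $100$ for the non-private counterpart, and adding it to $n_P$ in the P-only rate reproduces (10) exactly.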

4.2 Convergence Rate of LPCT

In the following, we show that the convergence rate of LPCT attains the lower bound with properly chosen parameters. The proof is based on a carefully designed decomposition in Appendix B.3. Define the quantity

$$\delta := \left(\frac{n_P\varepsilon^2}{\log(n_P+n_Q)} + \left(\frac{n_Q}{\log(n_P+n_Q)}\right)^{\frac{2\alpha+2d}{2\gamma\alpha+d}}\right)^{-\frac{d}{2\alpha+2d}}. \tag{11}$$

Theorem 4 Let $\pi$ be constructed through the max-edge partition in Section 3.2 with depth $p$. Let $\tilde f^{\pi}_{P,Q}$ be the locally private two-sample tree classifier. Suppose Assumptions 1, 2, and 3 hold. Then, for $n_P^{-\frac{\alpha}{2\alpha+2d}} \lesssim \varepsilon \lesssim n_P^{\frac{d}{4\alpha+2d}}$, if $\lambda \asymp \delta^{\frac{(\gamma\alpha-\alpha)\wedge(2\gamma\alpha-d)}{d}}$ and $2^p \asymp \delta^{-1}$, there holds

$$R_P(\tilde f^{\pi}_{P,Q}) - R_P^* \lesssim \delta^{\frac{\alpha(\beta+1)}{d}} = \left(\frac{n_P\varepsilon^2}{\log(n_P+n_Q)} + \left(\frac{n_Q}{\log(n_P+n_Q)}\right)^{\frac{2\alpha+2d}{2\gamma\alpha+d}}\right)^{-\frac{\alpha(\beta+1)}{2\alpha+2d}} \tag{12}$$

with probability $1 - 3/(n_P+n_Q)^2$ with respect to $P^{n_P}\otimes Q^{n_Q}\otimes R$, where $R$ is the joint distribution of the privacy mechanisms in (5) and (6).

Compared to Theorem 3, LPCT with the best parameter choice attains the mini-max lower bound up to a logarithmic factor. Both parameters $\lambda$ and $p$ must be of the correct order to achieve the optimal trade-off. The depth $p$ increases when either $n_P$ or $n_Q$ grows. This is intuitively correct, since the depth of a decision tree should increase with the number of samples. The strength ratio $\lambda$, on the other hand, is closely related to the value of $\gamma$. As explained earlier, a small $\gamma$ guarantees a stronger signal in $\eta^*_Q$. Accordingly, $\lambda$ is decreasing in $\gamma$: when the signal strength of $\eta^*_Q$ is large, we rely more on the estimate from the Q data by assigning it a larger weight.
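The parameter choices in Theorem 4 are stated only up to constants. The following sketch evaluates the orders of $\delta$, $\lambda$, and $p$ with all constants set to one, which is an assumption made for illustration rather than a tuning rule from the paper; the function names are hypothetical.

```python
import math

def delta_order(n_P, n_Q, eps, alpha, d, gamma):
    """delta from (11) with constants dropped."""
    log_n = math.log(n_P + n_Q)
    private_part = n_P * eps ** 2 / log_n
    public_part = (n_Q / log_n) ** ((2 * alpha + 2 * d) / (2 * gamma * alpha + d))
    return (private_part + public_part) ** (-d / (2 * alpha + 2 * d))

def lambda_order(delta, alpha, d, gamma):
    """Order of lambda in Theorem 4: delta^(min(gamma*alpha - alpha, 2*gamma*alpha - d) / d)."""
    exponent = min(gamma * alpha - alpha, 2 * gamma * alpha - d) / d
    return delta ** exponent

def depth_order(delta):
    """Depth p with 2^p of the order 1/delta, rounded to the nearest positive integer."""
    return max(1, round(math.log2(1.0 / delta)))

def excess_risk_order(delta, alpha, beta, d):
    """Upper bound delta^(alpha * (beta + 1) / d) from (12)."""
    return delta ** (alpha * (beta + 1) / d)

# Usage: orders (not exact values) for a hypothetical configuration.
delta = delta_order(n_P=10_000, n_Q=100, eps=1.0, alpha=1, d=2, gamma=1)
p, lam = depth_order(delta), lambda_order(delta, alpha=1, d=2, gamma=1)
```

With $\delta$ fixed at 0.01, $\alpha = 1$, and $d = 2$, the sketch gives $\lambda$ of order 10 for $\gamma = 0.5$ but of order 0.01 for $\gamma = 3$, in line with $\lambda$ decreasing in $\gamma$.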

We note that when $\varepsilon$ is large, there is a gap between $(e^{\varepsilon}-1)^2$ in the lower bound and $\varepsilon^2$ in the upper bound. The problem can be mitigated by employing the randomized response mechanism (Warner, 1965) instead of the Laplace mechanism, as mentioned in Section 3.3, and the theoretical analysis generalizes analogously. We do not address this issue here, since our focus is on public data.

The theorem restricts $\varepsilon$ to $n_P^{-\frac{\alpha}{2\alpha+2d}} \lesssim \varepsilon \lesssim n_P^{\frac{d}{4\alpha+2d}}$. When $\varepsilon \lesssim n_P^{-\frac{\alpha}{2\alpha+2d}}$, the estimator is drastically perturbed by the random noise and the convergence rate is no longer optimal. As $\varepsilon$ grows, the upper bound of the excess risk decreases until $\varepsilon \asymp n_P^{\frac{d}{4\alpha+2d}}$. When $\varepsilon \gtrsim n_P^{\frac{d}{4\alpha+2d}}$, the random noise is negligible and the convergence rate remains

$$\left(\frac{n_P}{\log(n_P+n_Q)} + \left(\frac{n_Q}{\log(n_P+n_Q)}\right)^{\frac{2\alpha+d}{2\gamma\alpha+d}}\right)^{-\frac{\alpha(\beta+1)}{2\alpha+d}},$$

which recovers the rate of non-private learning established in Cai and Wei (2021).
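As a quick consistency check on where the private rate meets the non-private one, one can equate the P-data terms of the two rates, dropping logarithmic factors and the Q-data terms (a simplification made here for illustration):

```latex
\begin{aligned}
(n_P\varepsilon^2)^{-\frac{\alpha(\beta+1)}{2\alpha+2d}} = n_P^{-\frac{\alpha(\beta+1)}{2\alpha+d}}
&\iff (n_P\varepsilon^2)^{\frac{1}{2\alpha+2d}} = n_P^{\frac{1}{2\alpha+d}} \\
&\iff \varepsilon^2 = n_P^{\frac{2\alpha+2d}{2\alpha+d} - 1} = n_P^{\frac{d}{2\alpha+d}} \\
&\iff \varepsilon = n_P^{\frac{d}{4\alpha+2d}}.
\end{aligned}
```

For smaller $\varepsilon$ the privacy term dominates; beyond this point, increasing $\varepsilon$ cannot improve on the non-private rate.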

We now discuss the order of the ratio $\lambda$. When $\gamma \ge \frac{d}{\alpha} - 1$, we have $\lambda \asymp \delta^{\frac{(\gamma-1)\alpha}{d}}$. This rate is exactly the same as in the conventional transfer learning case (Cai and Wei, 2021) and suffices to achieve the optimal trade-off. Under privacy constraints, however, this choice of $\lambda$ fails when $\gamma < \frac{d}{\alpha} - 1$. Roughly speaking, if we let $\lambda \asymp \delta^{\frac{(\gamma-1)\alpha}{d}}$ in this case, there is a non-negligible probability that the noise $\frac{4}{\varepsilon}\sum_{i=1}^{n_P}\zeta_i^j$ in (8) dominates the non-private density estimate $\sum_{i=1}^{n_P}U_i^{Pj} + \lambda\sum_{i=1}^{n_Q}U_i^{Qj}$. When this happens, the point-wise estimate is determined by the random noise, and thus the overall excess risk fails to converge.

To alleviate this issue, we assign a larger value $\lambda \asymp \delta^{\frac{2\gamma\alpha-d}{d}} > \delta^{\frac{(\gamma-1)\alpha}{d}}$ to ensure $\frac{4}{\varepsilon}\sum_{i=1}^{n_P}\zeta_i^j \lesssim \sum_{i=1}^{n_P}U_i^{Pj} + \lambda\sum_{i=1}^{n_Q}U_i^{Qj}$. In other words, in the private setting we place greater reliance on the estimate from public data than in the non-private setting.
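A back-of-the-envelope comparison makes the domination argument concrete. The sketch assumes that the $\zeta_i^j$ are i.i.d. standard Laplace variables (variance 2) and that all $2^p$ cells have equal mass under both $P_X$ and $Q_X$; both are simplifications for illustration, and comparing a standard deviation with an expected count is a heuristic rather than the argument used in the proof. The function names are hypothetical.

```python
import math

def laplace_sum_sd(n_P, eps):
    """Std. dev. of (4/eps) * sum_{i=1}^{n_P} zeta_i for i.i.d. standard Laplace zeta_i.

    A standard Laplace variable has variance 2, so the sum has variance 2 * n_P.
    """
    return 4.0 / eps * math.sqrt(2.0 * n_P)

def expected_weighted_count(n_P, n_Q, lam, p):
    """E[sum_i U_i^{Pj} + lam * sum_i U_i^{Qj}] for one of 2**p cells of equal mass 2**-p."""
    return (n_P + lam * n_Q) / 2 ** p

# Hypothetical setting: n_P = 10,000 private and n_Q = 200 public samples, eps = 1, depth 6.
noise = laplace_sum_sd(10_000, 1.0)
for lam in (0.0, 100.0, 500.0):
    print(lam, expected_weighted_count(10_000, 200, lam, 6), noise)
```

In this hypothetical setting the noise standard deviation (about 566) exceeds the expected weighted count for $\lambda = 0$ (156.25) and $\lambda = 100$ (468.75), but not for $\lambda = 500$ (1718.75), so only the larger weight keeps the denominator of (8) away from noise-level values.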

Last, we discuss the computational complexity of LPCT, starting with the average time complexity. The training stage consists of two parts. Similar to the standard decision tree algorithm (Breiman, 1984), the partition procedure takes $O(p\,n_Q d)$ time. The computation of (8) takes $O(p(n_P+n_Q))$ time. From the proof of Theorem 4, we know that $2^p \asymp \delta^{-1}$, where $\delta^{-1} \lesssim n_P + n_Q$, so $p = O(\log(n_P+n_Q))$. Thus the training complexity is around $O((n_P + n_Q d)\log(n_P+n_Q))$, which is linear up to a logarithmic factor. Since each prediction of the decision tree takes $O(p)$ time, the test time for each test instance is at most $O(\log(n_P+n_Q))$. As for storage, since LPCT only needs to store the tree structure and the prediction value at each node, the space complexity of LPCT is $O(\delta^{-1})$, which is sub-linear in the sample size. In short, LPCT is efficient in both time and memory.

4.3 Eliminating the Range Parameter

We discuss how public data can eliminate the range parameter contained in the convergence rate. When $\mathcal{X}$ is unknown, one must select a predefined range $[-r, r]^d$ for creating partitions; only then do the data holders have a reference for encoding their information. In this case, the convergence rate of Berrett and Butucea (2019) becomes $\left(r^{2d}/(n_P\varepsilon^2)\right)^{\frac{(1+\beta)\alpha}{2\alpha+2d}} \vee \left(1 - \int_{[-r,r]^d} dP(x)\right)$, which is slower than the rate for a known $\mathcal{X} = [0,1]^d$ for any $r$. A small $r$ means that a large part of the informative region of $\mathcal{X}$ will be ignored (the second term is large), whereas a large $r$ may create too many redundant cells (the first term is large). See Györfi and Kroll (2022) for a detailed derivation. Without much prior knowledge, this parameter can be hard to tune. The removal of the range parameter was first discussed in Bie et al. (2022), where $d+1$ public samples are required. Our result shows that, with the help of public data, we do not need to tune this range parameter.

Theorem 5 Suppose the domain $\mathcal{X} = \times_{j=1}^{d}[a_j, b_j]$ is unknown. Let $\hat a_j = \min_i X_i^j$ and $\hat b_j = \max_i X_i^j$. Define the min-max scaling map $M : \mathbb{R}^d \to \mathbb{R}^d$ where $M(x)_j = (x_j - \hat a_j)/(\hat b_j - \hat a_j)$. The min-max scaled data sets are written as $M(D_P)$ and $M(D_Q)$. Then for $\tilde f^{\pi}_{P,Q}$ trained within $[0,1]^d$ on $M(D_P)$ and $M(D_Q)$ as in Theorem 4, we have

$$R_P\left(\left(\tilde f^{\pi}_{P,Q}\cdot\mathbf{1}_{[0,1]^d}\right)\circ M\right) - R_P^* \lesssim \delta^{\frac{\alpha(\beta+1)}{d}} + \frac{\log n_Q}{n_Q}$$

with probability $1 - 3/(n_P+n_Q)^2 - d/n_Q^2$ with respect to $P^{n_P}\otimes Q^{n_Q}\otimes R$.

Compared to Theorem 4, the upper bound of Theorem 5 increases by $\log n_Q/n_Q$, which is a minor term in most cases. Moreover, Theorem 5 fails with an additional probability $d/n_Q^2$, since we are estimating $[a_j, b_j]$ with $[\hat a_j, \hat b_j]$. Both changes indicate that sufficient public data is required to resolve the unknown range issue.
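A minimal sketch of the min-max scaling in Theorem 5, assuming the coordinate-wise minima and maxima are taken over the public features $X_i^Q$ (the extra failure probability $d/n_Q^2$ suggests the public sample is used). The helper names and the constant stand-in classifier are hypothetical.

```python
import numpy as np

def fit_range(X_Q):
    """Estimate [a_j, b_j] by coordinate-wise min and max (here of the public features)."""
    return X_Q.min(axis=0), X_Q.max(axis=0)

def min_max_map(X, a_hat, b_hat):
    """M(x)_j = (x_j - a_hat_j) / (b_hat_j - a_hat_j)."""
    return (X - a_hat) / (b_hat - a_hat)

def predict_on_unknown_range(classifier, X, a_hat, b_hat):
    """Evaluate (f * 1_{[0,1]^d}) o M: points mapped outside [0,1]^d get label 0."""
    Z = min_max_map(X, a_hat, b_hat)
    inside = np.all((Z >= 0.0) & (Z <= 1.0), axis=1)
    labels = np.zeros(len(X), dtype=int)
    if inside.any():
        labels[inside] = classifier(Z[inside])
    return labels

# Usage with hypothetical public features and a constant stand-in classifier.
X_Q = np.array([[1.0, 10.0], [3.0, 20.0], [2.0, 15.0]])
a_hat, b_hat = fit_range(X_Q)
preds = predict_on_unknown_range(lambda Z: np.ones(len(Z), dtype=int),
                                 np.array([[2.0, 15.0], [5.0, 15.0]]), a_hat, b_hat)
```

Points that the estimated map sends outside $[0,1]^d$ receive label 0, which is what the factor $\mathbf{1}_{[0,1]^d}$ in Theorem 5 encodes.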

5. Data-driven Tree Structure Pruning

In this section, we develop a data-driven approach for parameter tuning and investigate its

theoretical properties. In Section 5.1, we derive a selection scheme in the spirit of Lepski’s

method (Lepskii, 1992), a classical method for adaptively choosing the hyperparameters.

In Section 5.2, we make slight modifications to the scheme and illustrate the effectiveness

of the amended approach.


[Figure 2 panels: (a) Initial estimation; (b) During pruning; (c) Pruned estimation. Node labels: $A_1^{(0)}$; $A_1^{(1)}$; $A_1^{(2)}$, $A_2^{(2)}$; $A_1^{(3)}$, $A_2^{(3)}$, $A_3^{(3)}$, $A_4^{(3)}$.]

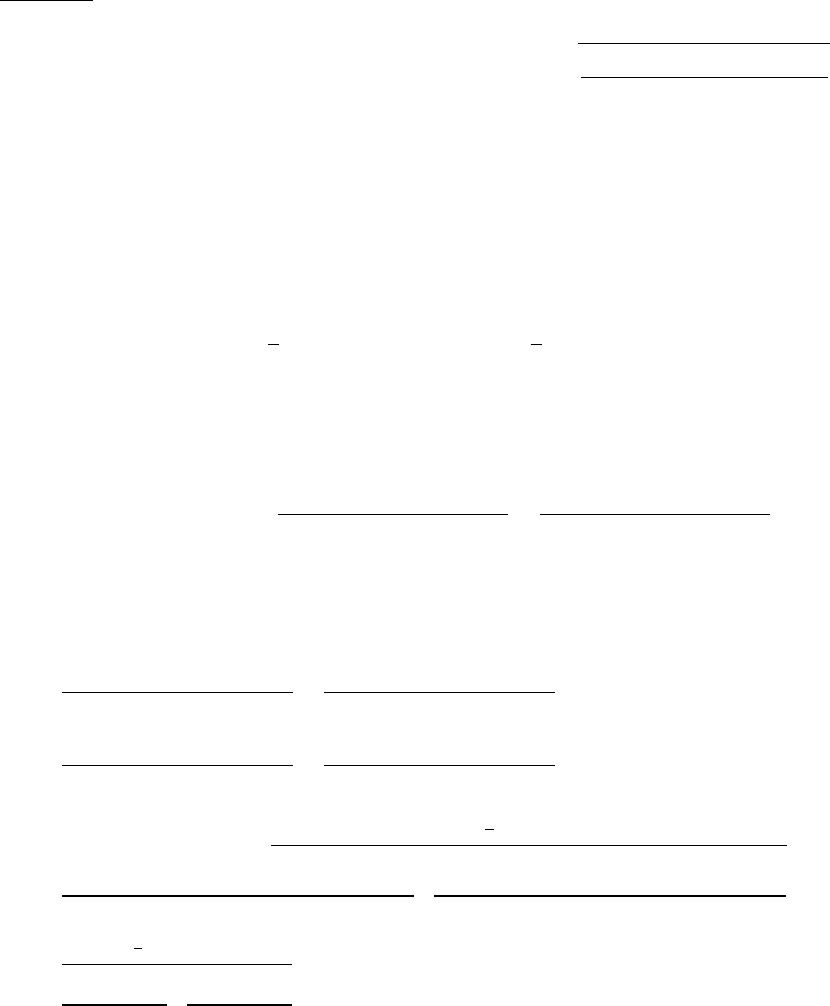

Figure 2: Illustration of the pruning process. The yellow area filled at node $A_j^{(i)}$ means that for $x \in A_j^{(i)}$, we use the average of the labels in the yellow area instead of those in $A_j^{(i)}$ itself. The prediction in $A_1^{(3)}$ is pruned to its depth-1 ancestor; the prediction in $A_3^{(3)}$ is pruned to its depth-2 ancestor; the prediction in $A_2^{(3)}$ is pruned to its depth-3 ancestor, i.e. not pruned.

5.1 A Naive Strategy

We first develop a private analog of Lepski's method (Lepskii, 1992) for LPCT. There are two parameters, the depth $p$ and the relative ratio $\lambda$, whose optimal values depend on the unknown $\alpha$ and $\gamma$. Rather than fixing the parameters to specific values, we first query the privatized information with a sufficiently large tree depth $p_0$. Then, we introduce a greedy procedure that locally prunes the tree to an appropriate depth and selects $\lambda$ based on local information.

We provide a preliminary result that will guide the derivation of the pruning procedure. For the max-edge partition $\pi_{p_0}$ with depth $p_0$ and node $A_j^{(p_0)}$, the depth-$k$ ancestor private tree estimator is defined as

$$\tilde\eta^{\pi_k}_{P,Q}(x) = \sum_{j\in\mathcal{I}_{p_0}}\mathbf{1}\{x\in A_j^{(p_0)}\}\,\frac{\sum_{j':\,\mathrm{ancestor}(A_{j'}^{(p_0)},k)=\mathrm{ancestor}(A_j^{(p_0)},k)}\left(\sum_{i=1}^{n_P}\tilde V_i^{Pj'} + \lambda\sum_{i=1}^{n_Q}V_i^{Qj'}\right)}{\sum_{j':\,\mathrm{ancestor}(A_{j'}^{(p_0)},k)=\mathrm{ancestor}(A_j^{(p_0)},k)}\left(\sum_{i=1}^{n_P}\tilde U_i^{Pj'} + \lambda\sum_{i=1}^{n_Q}U_i^{Qj'}\right)} \tag{13}$$

and consequently the classifier is defined as $\tilde f^{\pi_k}_{P,Q}(x) = \mathbf{1}(\tilde\eta^{\pi_k}_{P,Q}(x) > 1/2)$. See Figure 2(a) for an illustration of estimation at ancestor nodes.
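Because (13) only re-aggregates per-leaf sums, all ancestor estimates can be computed from the depth-$p_0$ statistics without further queries. The sketch below assumes a hypothetical storage layout in which the $2^{p_0}$ leaves are ordered so that the descendants of each depth-$k$ ancestor are contiguous; its inputs are the per-leaf sums $\sum_i \tilde V_i^{Pj}$, $\sum_i \tilde U_i^{Pj}$, $\sum_i V_i^{Qj}$, and $\sum_i U_i^{Qj}$, and the function names are hypothetical.

```python
import numpy as np

def ancestor_sums(leaf_values, k):
    """Sum leaf-level values over each depth-k ancestor and broadcast back to the leaves.

    Assumes the 2**p0 leaves are stored left to right, so the descendants of a
    depth-k ancestor form a contiguous block of 2**(p0 - k) leaves.
    """
    v = np.asarray(leaf_values, dtype=float)
    group = len(v) // 2 ** k
    return np.repeat(v.reshape(2 ** k, group).sum(axis=1), group)

def ancestor_estimate(sum_V_P, sum_U_P, sum_V_Q, sum_U_Q, lam, k):
    """Depth-k ancestor estimate (13) for every leaf A_j^{(p0)}."""
    num = ancestor_sums(sum_V_P, k) + lam * ancestor_sums(sum_V_Q, k)
    den = ancestor_sums(sum_U_P, k) + lam * ancestor_sums(sum_U_Q, k)
    return num / den

# Toy per-leaf sums for p0 = 2 (four leaves); Q sums set to zero for simplicity.
V_P, U_P = [1, 0, 1, 1], [2, 1, 1, 2]
zeros = [0, 0, 0, 0]
eta_k1 = ancestor_estimate(V_P, U_P, zeros, zeros, lam=1.0, k=1)   # [1/3, 1/3, 2/3, 2/3]
```

Setting `k = p0` recovers the leaf-level estimate (8), while `k = 0` pools everything into the root.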

Proposition 6 Let $\pi$ be constructed through the max-edge partition in Section 3.2 with depth $p_0$. Let $\tilde\eta^{\pi_k}_{P,Q}$ be the tree estimator with depth-$k$ ancestor nodes in (13). Let $\hat\eta^{\pi_k}_{P,Q}$ be the non-private tree estimator with partition $\pi_k$ in (9). Define the quantity

$$r_k^j := \sqrt{\frac{4C_1\left(2^{p_0-k+3}\varepsilon^{-2}n_P \vee \sum_{j'}\sum_{i=1}^{n_P}\tilde U_i^{Pj'}\right) + C_1\lambda^2\sum_{j'}\sum_{i=1}^{n_Q}U_i^{Qj'}}{\left(\sum_{j'}\sum_{i=1}^{n_P}\tilde U_i^{Pj'} + \lambda\sum_{j'}\sum_{i=1}^{n_Q}U_i^{Qj'}\right)^2}} \tag{14}$$

where the summation over $j'$ runs over all descendant nodes of the depth-$k$ ancestor, i.e. $\mathrm{ancestor}(A_{j'}^{(p_0)}, k) = \mathrm{ancestor}(A_j^{(p_0)}, k)$. The constant $C_1$ is specified in the proof. Then, for all $\lambda$ and $p_0$, if $x \in A_j^{(p_0)}$, there holds

$$\left|\tilde\eta^{\pi_k}_{P,Q}(x) - \mathbb{E}_{Y|X}\left[\hat\eta^{\pi_k}_{P,Q}(x)\right]\right| \le r_k^j$$

with probability $1 - 3/(n_P+n_Q)^2$ with respect to $P^{n_P}_{Y^P|X^P}\otimes Q^{n_Q}_{Y^Q|X^Q}\otimes R$, where $R$ is the joint distribution of the privacy mechanisms in (5) and (6), and $\mathbb{E}_{Y|X}$ is taken with respect to $P^{n_P}_{Y|X}\otimes Q^{n_Q}_{Y|X}$.

The above proposition indicates that if $\tilde\eta^{\pi_k}_{P,Q}(x) - 1/2 \ge r_k^j$, then

$$\mathbb{E}_{Y|X}\left[\hat\eta^{\pi_k}_{P,Q}(x)\right] - \frac12 = \mathbb{E}_{Y|X}\left[\hat\eta^{\pi_k}_{P,Q}(x)\right] - \tilde\eta^{\pi_k}_{P,Q}(x) + \tilde\eta^{\pi_k}_{P,Q}(x) - \frac12 \ge 0$$

with probability $1 - 3/(n_P+n_Q)^2$. A similar conclusion holds when $\tilde\eta^{\pi_k}_{P,Q}(x) - 1/2 \le -r_k^j$. In other words, when

$$\frac{\left|\tilde\eta^{\pi_k}_{P,Q}(x) - \frac12\right|}{r_k^j} \ge 1, \tag{15}$$

the population version of the ancestor estimate lies on the same side of 1/2 as the sample version. As long as (15) holds, it suffices to use the sample version, and our goal is to find the best $k$ for $\mathbb{E}_{Y|X}[\hat\eta^{\pi_k}_{P,Q}(x)]$ to approximate $\eta^*_P(x)$. Under the continuity condition in Assumption 1, the approximation error is controlled by the radius of the largest cell (see Lemma 15). Consequently, one can show that $R_P\left(\mathbf{1}\left(\mathbb{E}_{Y|X}[\hat\eta^{\pi_k}_{P,Q}(x)] > 1/2\right)\right)$ is monotonically decreasing in $k$. Thus, our goal is to find the largest $k$ such that (15) holds.

On the other hand, (15) depends on $\lambda$. For each $j$ and $k$, we select the $\lambda$ that gives (15) the best chance to hold, i.e.

$$\lambda_k^j = \arg\max_{\lambda}\frac{\left|\tilde\eta^{\pi_k}_{P,Q}(x) - 1/2\right|}{r_k^j} \tag{16}$$

for $x \in A_j^{(p_0)}$. The optimization problem in (16) has a closed-form solution that can be computed explicitly and efficiently; its derivation is deferred to Section A.2.
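The statistic in (15) and the choice of $\lambda$ in (16) can be sketched as follows. The paper derives a closed-form maximizer in Section A.2, which is not reproduced here; this sketch substitutes a plain grid search over $\lambda$. The constant $C_1$ is only specified in the proof, so `C1` is a user-supplied placeholder, and all names are hypothetical.

```python
import math

def radius(S_UP, S_UQ, lam, n_P, eps, p0, k, C1):
    """r_k^j from (14); S_UP and S_UQ are the descendant sums of U-tilde^P and U^Q."""
    noise_term = 2 ** (p0 - k + 3) * n_P / eps ** 2
    numerator = 4 * C1 * max(noise_term, S_UP) + C1 * lam ** 2 * S_UQ
    return math.sqrt(numerator) / abs(S_UP + lam * S_UQ)

def signal_ratio(S_VP, S_UP, S_VQ, S_UQ, lam, n_P, eps, p0, k, C1):
    """|eta_tilde - 1/2| / r_k^j, the statistic in (15) and (16)."""
    eta = (S_VP + lam * S_VQ) / (S_UP + lam * S_UQ)
    return abs(eta - 0.5) / radius(S_UP, S_UQ, lam, n_P, eps, p0, k, C1)

def select_lambda(S_VP, S_UP, S_VQ, S_UQ, n_P, eps, p0, k, C1, grid):
    """Grid-search stand-in for the closed-form maximizer of (16)."""
    scores = [signal_ratio(S_VP, S_UP, S_VQ, S_UQ, lam, n_P, eps, p0, k, C1) for lam in grid]
    best = max(range(len(grid)), key=lambda i: scores[i])
    return grid[best], scores[best]

# Hypothetical node: uninformative privatized P sums and informative public Q sums.
lam_best, score = select_lambda(S_VP=5.0, S_UP=10.0, S_VQ=90.0, S_UQ=100.0,
                                n_P=1000, eps=1.0, p0=4, k=2, C1=1.0,
                                grid=[0.0, 0.5, 1.0, 10.0, 100.0])
```

In this toy node the P sums carry no signal (their ratio is exactly 1/2), so the statistic is zero at $\lambda = 0$ and grows with $\lambda$; the grid search picks the largest weight, $\lambda = 100$.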

Based on the above analysis, we can perform the following pruning procedure. We first query with a sufficient depth, i.e. we select $p_0$ large enough, create a partition on $D_Q$, and receive the information from the data holders. Then we prune back as follows:

• For $j \in \mathcal{I}_{p_0}$, we do the following: for $k = p_0, \cdots, 2$ and $r_k^j$ defined in (14), we assign $\lambda_k^j$ as in (16). Then, if (15) holds, let $k_j = k$; else, set $k \leftarrow k - 1$.
• If $k_j$ is not assigned, we select $k_j = \arg\max_k |\tilde\eta^{\pi_k}_{P,Q}(x) - 1/2|/r_k^j$.
• Assign $\tilde\eta^{\mathrm{prune}}_{P,Q}(x) = \tilde\eta^{\pi_{k_j}}_{P,Q}(x)$ for all $x \in A_j^{(p_0)}$.

This process can be carried out efficiently; the exact process is illustrated in Figure 2. We first calculate the estimates as well as the relative signal strength at all nodes. Then we trace back from each leaf node to the root and find the node that maximizes the statistic in (15). The prediction value at the leaf node is assigned as the prediction at the ancestor node with the maximum statistic value. In total, pruning adds a time complexity of at most $O(2^{p_0})$, which is negligible compared to the original complexity as long as $2^{p_0} \lesssim n_P + d\,n_Q$.
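The greedy procedure above can be sketched end to end under the same kind of simplifications: a grid search over $\lambda$ in place of the closed form of Section A.2, a placeholder constant `C1`, and a hypothetical leaf ordering in which the descendants of each depth-$k$ ancestor are contiguous. For each leaf the sketch returns the selected depth $k_j$ and the corresponding estimate; all names are hypothetical.

```python
import math
import numpy as np

def ancestor_sums(leaf_values, k):
    """Sum leaf values over each depth-k ancestor (contiguous leaf blocks), broadcast to leaves."""
    v = np.asarray(leaf_values, dtype=float)
    group = len(v) // 2 ** k
    return np.repeat(v.reshape(2 ** k, group).sum(axis=1), group)

def best_ratio(S_VP, S_UP, S_VQ, S_UQ, n_P, eps, p0, k, C1, grid):
    """Per leaf, maximize |eta - 1/2| / r_k^j over lambda in `grid`; return (ratio, estimate)."""
    noise = 2 ** (p0 - k + 3) * n_P / eps ** 2
    best_v = np.full(len(S_UP), -np.inf)
    best_eta = np.zeros(len(S_UP))
    for lam in grid:
        den = S_UP + lam * S_UQ
        eta = (S_VP + lam * S_VQ) / den
        r = np.sqrt(4 * C1 * np.maximum(noise, S_UP) + C1 * lam ** 2 * S_UQ) / np.abs(den)
        v = np.abs(eta - 0.5) / r
        better = v > best_v
        best_v = np.where(better, v, best_v)
        best_eta = np.where(better, eta, best_eta)
    return best_v, best_eta

def naive_prune(leaf_VP, leaf_UP, leaf_VQ, leaf_UQ, n_P, eps, C1, grid, k_min=2):
    """Greedy pruning of Section 5.1: for each leaf, the deepest k satisfying (15), else the argmax."""
    p0 = int(round(math.log2(len(leaf_UP))))
    depths = np.arange(p0, k_min - 1, -1)            # k = p0, ..., k_min
    ratios, estimates = [], []
    for k in depths:
        sums = [ancestor_sums(a, k) for a in (leaf_VP, leaf_UP, leaf_VQ, leaf_UQ)]
        v, eta = best_ratio(*sums, n_P, eps, p0, k, C1, grid)
        ratios.append(v)
        estimates.append(eta)
    ratios, estimates = np.array(ratios), np.array(estimates)   # shape (len(depths), 2**p0)
    leaves = np.arange(len(leaf_UP))
    chosen = np.empty(len(leaves), dtype=int)
    for j in leaves:
        passing = np.flatnonzero(ratios[:, j] >= 1.0)            # depths where (15) holds
        chosen[j] = passing[0] if passing.size else np.argmax(ratios[:, j])
    return depths[chosen], estimates[chosen, leaves]

# Hypothetical leaf sums for p0 = 2; public sums are zero so only P data matters here.
k_sel, eta_pruned = naive_prune(leaf_VP=[95, 50, 50, 50], leaf_UP=[100, 100, 100, 100],
                                leaf_VQ=[0, 0, 0, 0], leaf_UQ=[0, 0, 0, 0],
                                n_P=400, eps=1e6, C1=1.0, grid=[0.0, 1.0], k_min=1)
```

In the toy input, the first leaf keeps its own depth, the second borrows from its depth-1 ancestor, and the last two never satisfy (15), so the argmax rule applies (ties are broken toward the deepest level).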


5.2 Pruned LDP Classification Tree

In the non-private case, i.e. when $\varepsilon = \infty$, $\tilde\eta^{\mathrm{prune}}_{P,Q}$ is essentially Lepski's method (Lepskii, 1992; Cai and Wei, 2021). However, in the presence of privacy constraints, a tricky term $2^{p_0-k+3}\varepsilon^{-2}n_P$ arises in (14) due to the Laplace noise. Specifically, if $2^{p_0-k+3}\varepsilon^{-2}n_P \ge \sum_{j'}\sum_{i=1}^{n_P}\tilde U_i^{Pj'}$, the first term in the numerator of (14) contains no information other than the level of noise. As a result, the theoretical analysis of Lepski's method no longer applies.

To deal with this issue, we simultaneously perform two pruning procedures that use information from the P data alone or the Q data alone. We first query with a large depth $p_0 = \left\lfloor\frac{d}{2+2d}\log_2\left(n_P\varepsilon^2 + n_Q^{\frac{2+2d}{d}}\right)\right\rfloor$ and half of the budget, $\varepsilon/2$. When $2^{p_0-k+3}\varepsilon^{-2}n_P \ge \sum_{j'}\sum_{i=1}^{n_P}\tilde U_i^{Pj'}$, we compare the values of $|\tilde\eta^{\pi_k}_{P,Q}(x) - 1/2|/r_k^j$ with $\lambda = 0$ and $\lambda = \infty$, corresponding to the P data and the Q data, respectively. If either statistic is larger than 1, we terminate the pruning. Otherwise, we compare the two statistics. If the statistic associated with Q is larger, we return the estimate $\hat f^{\pi_k}_Q$; in this case, the pruned classifier is guaranteed to be at least as good as the estimate using only Q data. If the statistic associated with P is larger, we continue pruning to $k - 1$. If we reach $k \le \left\lfloor\frac{d}{2+2d}\log_2(n_P\varepsilon^2)\right\rfloor$ for any $j$, we terminate the whole algorithm and return (7) with depth $\left\lfloor\frac{d}{2+2d}\log_2(n_P\varepsilon^2)\right\rfloor$, which costs the other $\varepsilon/2$. In this case, we are assured that the Q data is unimportant, and we adopt a terminating condition that is close to the optimal value $\left\lfloor\frac{d}{2\alpha+2d}\log_2(n_P\varepsilon^2)\right\rfloor$. The detailed algorithm is presented in Algorithm 2. Moreover, we show that the adjusted pruning procedure results in a near-optimal excess risk.
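The two depths used by the adjusted procedure follow directly from the sample sizes and the budget. A minimal sketch with hypothetical function names (the product with $d$ is formed before dividing, which keeps exact cases such as $\log_2 4096 = 12$ exact before the floor):

```python
import math

def initial_depth(n_P, n_Q, eps, d):
    """p0 = floor(d / (2 + 2d) * log2(n_P * eps^2 + n_Q^((2 + 2d) / d)))."""
    return math.floor(d * math.log2(n_P * eps ** 2 + n_Q ** ((2 + 2 * d) / d)) / (2 + 2 * d))

def termination_depth(n_P, eps, d):
    """floor(d / (2 + 2d) * log2(n_P * eps^2)): reaching it triggers the P-only refit."""
    return math.floor(d * math.log2(n_P * eps ** 2) / (2 + 2 * d))

# Hypothetical sizes: d = 2, n_P = 4096, n_Q = 64, eps = 1.
p0 = initial_depth(4096, 64, 1.0, 2)
k_stop = termination_depth(4096, 1.0, 2)
```

For these sizes the sketch gives $p_0 = 6$ and a termination depth of 4, so the P-data branch can prune back by at most two levels before the P-only refit is triggered.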

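Theorem 7 Let $\tilde f^{\mathrm{prune}}_{P,Q} = \mathbf{1}(\tilde\eta^{\mathrm{prune}}_{P,Q} \ge 1/2)$ be the pruned LPCT defined in Algorithm 2. Suppose Assumptions 1, 2, and 3 hold. Let $\delta$ be defined in (11). Then, for $n_P^{-\frac{\alpha}{2\alpha+2d}} \lesssim \varepsilon \lesssim n_P^{\frac{d}{4+2d}}$, with probability $1 - 4/(n_P+n_Q)^2$ with respect to $P^{n_P}\otimes Q^{n_Q}\otimes R$, we have

(i) if $\frac{n_P\varepsilon^2}{\log(n_P+n_Q)} \lesssim \left(\frac{n_Q}{\log(n_P+n_Q)}\right)^{\frac{2\alpha+2d}{2\gamma\alpha+d}}$, then $R_P(\tilde f^{\mathrm{prune}}_{P,Q}) - R_P^* \lesssim \delta^{\frac{\alpha(\beta+1)}{d}}$;

(ii) if $\frac{n_P\varepsilon^2}{\log(n_P+n_Q)} \gtrsim \left(\frac{n_Q}{\log(n_P+n_Q)}\right)^{\frac{2\alpha+2d}{2\gamma\alpha+d}}$, then $R_P(\tilde f^{\mathrm{prune}}_{P,Q}) - R_P^* \lesssim \delta^{\frac{\alpha(\alpha+d)(\beta+1)}{d(1+d)}}$.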

The conclusion of Theorem 7 is twofold. On the one hand, when Q data dominates P data, i.e. $\frac{n_P\varepsilon^2}{\log(n_P+n_Q)} \lesssim \left(\frac{n_Q}{\log(n_P+n_Q)}\right)^{\frac{2\alpha+2d}{2\gamma\alpha+d}}$, the pruned estimator remains rate-optimal; in this case, the estimator is pruned to the correct depth. On the other hand, when P data dominates Q data, the convergence rate suffers some degradation. The degradation factor $(\alpha+d)/(1+d)$ is mild and even vanishes when $\alpha = 1$, i.e. when $\eta^*_P$ is Lipschitz continuous. In the P-dominated case, our conclusion is derived in two possible regions. If $\left(\frac{n_Q}{\log(n_P+n_Q)}\right)^{\frac{2\alpha+2d}{2\gamma\alpha+d}} \lesssim \left(\frac{n_P\varepsilon^2}{\log(n_P+n_Q)}\right)^{\frac{2\alpha+2d}{2+d}}$, the estimator triggers termination and is computed with depth $\left\lfloor\frac{d}{2+2d}\log_2(n_P\varepsilon^2)\right\rfloor$. In the opposite case, i.e. $\frac{n_P\varepsilon^2}{\log(n_P+n_Q)} \gtrsim \left(\frac{n_Q}{\log(n_P+n_Q)}\right)^{\frac{2\alpha+2d}{2\gamma\alpha+d}} \gtrsim \left(\frac{n_P\varepsilon^2}{\log(n_P+n_Q)}\right)^{\frac{2\alpha+2d}{2+d}}$, pruning fails to identify the optimal depth $\left\lfloor\frac{d}{2\alpha+2d}\log_2(n_P\varepsilon^2)\right\rfloor$, yet it also does not trigger termination at $\left\lfloor\frac{d}{2+2d}\log_2(n_P\varepsilon^2)\right\rfloor$. In this region, we take a shortcut to avoid an intricate analysis, while the true convergence rate is more complex but faster than that stated in Theorem 7. Notably, one drawback of this shortcut is the discontinuity of the upper bound at the boundary $\frac{n_P\varepsilon^2}{\log(n_P+n_Q)} \asymp \left(\frac{n_Q}{\log(n_P+n_Q)}\right)^{\frac{2\alpha+2d}{2\gamma\alpha+d}}$.


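Algorithm 2: Pruned LPCT

Input: private data $D_P = \{(X_i^P, Y_i^P)\}_{i=1}^{n_P}$, public data $D_Q = \{(X_i^Q, Y_i^Q)\}_{i=1}^{n_Q}$.
Initialization: $p_0 = \left\lfloor\frac{d}{2+2d}\log_2\left(n_P\varepsilon^2 + n_Q^{\frac{2+2d}{d}}\right)\right\rfloor$, flag $= 0$.
Create $\pi$ with depth $p_0$ on $D_Q$. Query (5) and (6) with $\varepsilon/2$.
for $A_j^{(p_0)}$ in $\pi$ do
    for $k = p_0, \cdots, 1$ do
        if $2^{p_0-k+3}\varepsilon^{-2}n_P \ge \sum_{j'}\sum_{i=1}^{n_P}\tilde U_i^{Pj'}$ then
            $r_k^{Qj} = \sqrt{\frac{4\log(n_P+n_Q)}{\sum_{j'}\sum_{i=1}^{n_Q}U_i^{Qj'}}}$, $v_k^{Qj} = |\hat\eta^{\pi_k}_Q - 1/2|/r_k^{Qj}$
            $r_k^{Pj} = \sqrt{\frac{2^{p_0-k+5}\varepsilon^{-2}n_P\log(n_P+n_Q)}{\left(\sum_{j'}\sum_{i=1}^{n_P}\tilde U_i^{Pj'}\right)^2}}$, $v_k^{Pj} = |\tilde\eta^{\pi_k}_P - 1/2|/r_k^{Pj}$
            if $v_k^{Qj} \le v_k^{Pj}$ then
                $s_k^j = \tilde\eta^{\pi_k}_P$, $r_k^j = r_k^{Pj}$, $v_k^j = v_k^{Pj}$
                if $k \le \left\lfloor\frac{d}{2+2d}\log_2(n_P\varepsilon^2)\right\rfloor$ then
                    $k_j = k$, flag $= 1$, break
                end
            else
                $s_k^j = \hat\eta^{\pi_k}_Q$, $r_k^j = r_k^{Qj}$, $v_k^j = v_k^{Qj}$
            end
        else
            $r_k^j = \sqrt{\frac{\left(32\sum_{j'}\sum_{i=1}^{n_P}\tilde U_i^{Pj'} + 4\lambda^2\sum_{j'}\sum_{i=1}^{n_Q}U_i^{Qj'}\right)\log(n_P+n_Q)}{\left(\sum_{j'}\sum_{i=1}^{n_P}\tilde U_i^{Pj'} + \lambda\sum_{j'}\sum_{i=1}^{n_Q}U_i^{Qj'}\right)^2}}$
            $v_k^j = \max_{\lambda}|\tilde\eta^{\pi_k}_{P,Q} - 1/2|/r_k^j$, $s_k^j = \tilde\eta^{\pi_k}_{P,Q}$ with $\lambda = \lambda_k^j$ in (16)
        end
        if $v_k^j \ge 1$ then
            $k_j = k$, break
        end
    end
end
if flag then
    $p = \left\lfloor\frac{d}{2+2d}\log_2(n_P\varepsilon^2)\right\rfloor$. Create $\pi_p$ on $D_Q$. Query (5) and (6) with $\varepsilon/2$.
    Output: $\tilde\eta^{\mathrm{prune}}_{P,Q} = \tilde\eta^{\pi_p}_P$.
else
    Output: $\tilde\eta^{\mathrm{prune}}_{P,Q} = \sum_j s_{k_j}^j\cdot\mathbf{1}\{A_j^{(p_0)}\}$.
end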
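The first branch of Algorithm 2, in which the privatized P counts are dominated by noise, reduces to comparing two single-source statistics at a node. The sketch below covers only that comparison; it omits the termination check against $\lfloor\frac{d}{2+2d}\log_2(n_P\varepsilon^2)\rfloor$ and the $\lambda$-weighted branch, and its function name and inputs are hypothetical.

```python
import math

def noise_dominated_branch(S_VP, S_UP, S_VQ, S_UQ, n_P, n_Q, eps, p0, k):
    """One step of the first branch of Algorithm 2 at a depth-k ancestor node.

    Inputs are descendant sums: S_VP, S_UP of the privatized P vectors and
    S_VQ, S_UQ of the public Q indicators. Returns None when the P counts are
    not dominated by noise (Algorithm 2 then uses the lambda-weighted statistic);
    otherwise returns (source, s_k^j, v_k^j) for the better single-source estimate.
    """
    log_n = math.log(n_P + n_Q)
    if 2 ** (p0 - k + 3) * n_P / eps ** 2 < S_UP:
        return None
    r_Q = math.sqrt(4 * log_n / S_UQ)
    v_Q = abs(S_VQ / S_UQ - 0.5) / r_Q
    r_P = math.sqrt(2 ** (p0 - k + 5) * n_P * log_n / eps ** 2) / abs(S_UP)
    v_P = abs(S_VP / S_UP - 0.5) / r_P
    if v_Q <= v_P:
        return "P", S_VP / S_UP, v_P
    return "Q", S_VQ / S_UQ, v_Q

# Hypothetical node at the leaf level (k = p0 = 4) with few privatized P counts.
step = noise_dominated_branch(S_VP=30.0, S_UP=50.0, S_VQ=90.0, S_UQ=100.0,
                              n_P=1000, n_Q=100, eps=1.0, p0=4, k=4)
```

With only 50 privatized P counts against a noise level of $2^{3}\cdot 1000 = 8000$, the P statistic is tiny and the public estimate 0.9 is returned.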


Algorithm 2 lacks true adaptiveness, given that its upper bound incurs a loss factor of $(\alpha+d)/(1+d)$ in the exponent, whereas conventional adaptive methods typically incur a loss factor of only logarithmic order (Butucea et al., 2020; Cai and Wei, 2021). Following the approach outlined in Butucea et al. (2020), one potential avenue for improvement is to fit multiple trees for $p = \left\lfloor\frac{d}{2+2d}\log_2(n_P\varepsilon^2)\right\rfloor, \cdots, \left\lfloor\frac{d}{2+2d}\log_2\left(n_P\varepsilon^2 + n_Q^{\frac{2+2d}{d}}\right)\right\rfloor$ and to design a test statistic that zeroes out the suboptimal candidates.

In summary, the proposed scheme offers two benefits. (1) It avoids repeated queries to the data: all data holders send their privatized messages at most twice. (2) It selects the sensitive parameters $p$ and $\lambda$ in a data-driven manner. In exchange, the excess-risk bound is moderately looser than that of the model with the best parameters, and one more query to the private data may be required.

6. Experiments on Synthetic Data

In this section, we validate our theoretical findings by comparing LPCT with several of its variants on synthetic data. All experiments are conducted on a machine with a 72-core Intel Xeon 2.60GHz CPU and 128GB memory. Code to reproduce the experiments is available on GitHub.¹

6.1 Simulation Design

We first motivate the design of the simulation settings. While we conduct experiments across various combinations of $\gamma$, $n_P$, and $n_Q$, our primary focus is on two categories of data: (1) both $n_Q$ and $\gamma$ are small, i.e. we have a small amount of high-quality public data; (2) both $n_Q$ and $\gamma$ are large, i.e. we have a large amount of low-quality public data. When $\gamma$ is small but $n_Q$ is large, using only public data already yields sufficient performance, rendering private estimation less meaningful. Conversely, when $\gamma$ is large but $n_Q$ is small, incorporating public data into the estimation offers limited assistance. Moreover, we require $n_P$ to be much larger than $n_Q$, since in practice data collection becomes much easier with a privacy guarantee.

We consider the following pair of distributions P and Q. The marginal distributions $P_X = Q_X$ are both uniform on $\mathcal{X} = [0,1]^2$. The regression function of P is

$$\eta_P(x) = \frac12 + \mathrm{sign}\left(x_1 - \frac13\right)\cdot\mathrm{sign}\left(x_2 - \frac13\right)\cdot\frac{(3x_1-1)(3x_2-1)}{10}\cdot\max\left\{0,\ 1 - \left|2x_2 - 1\right|^{\frac{1}{10}}\right\},$$

while the regression function of Q with parameter $\gamma$ is

$$\eta_Q(x) = \frac12 + \frac25\cdot\left(\frac52\cdot\left(\eta_P(x) - \frac12\right)\right)^{\gamma}.$$

It can be easily verified that the distributions constructed above satisfy Assumptions 1, 2, and 3. Throughout the following experiments, we take $\gamma \in \{0.5, 5\}$. For better illustration, the regression functions are plotted in Figure 3.

We conduct experiments with privacy budgets $\varepsilon \in \{0.5, 1, 2, 4, 8, 1000\}$. Here, $\varepsilon = 1000$ can be regarded as the non-private case, which marks the performance limit of our methods. The other budget values cover the commonly used magnitudes of privacy budgets.

1. https://github.com/Karlmyh/LPCT.


[Figure 3 panels: (a) $\eta_P$; (b) $\eta_Q$ with $\gamma = 0.5$; (c) $\eta_Q$ with $\gamma = 5$.]

Figure 3: Contour plots of the simulation distributions.

We take classification accuracy as the evaluation metric. In the simulation studies, the compared methods and their abbreviations are as follows:

• LPCT is the proposed algorithm with the max-edge partition rule. We select the parameters among $p \in \{1, \cdots, 8\}$ and $\lambda \in \{0.1, 0.5, 1, 2, 5, 10, 50, 100, 200, 300, 400, 500, 750, 1000, 1250, 1500, 2000\}$. We choose the Gini index as the reduction criterion.
• LPCT-P and LPCT-Q are the estimators associated with the P data and the Q data, respectively. Their parameter grids are the same as for LPCT.
• LPCT-R is the proposed algorithm using the random max-edge partition (Cai et al., 2023). Specifically, we randomly select an edge among the longest edges and cut it at the midpoint. Its parameter grids are the same as for LPCT.
• LPCT-prune is the pruned locally private classification tree in Algorithm 2. We use constants that yield a tighter bound than (14).

For each model, we report the best result over its parameter grid, where the best result is determined by the average over at least 100 replications.
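The random max-edge rule used by LPCT-R is simple to sketch. This covers only the random variant described above; the Gini-based choice used by LPCT follows Section 3.2 and is not reproduced. The function name is hypothetical.

```python
import numpy as np

def random_max_edge_partition(depth, d, rng):
    """Random max-edge partition of [0, 1]^d, as described for LPCT-R.

    At each level, every cell is cut at the midpoint of an edge chosen
    uniformly at random among its longest edges. Children of a cell are
    stored next to each other, so the output lists the 2**depth leaves
    left to right.
    """
    cells = [(np.zeros(d), np.ones(d))]          # (lower corner, upper corner)
    for _ in range(depth):
        children = []
        for lower, upper in cells:
            widths = upper - lower
            longest = np.flatnonzero(np.isclose(widths, widths.max()))
            dim = rng.choice(longest)             # random tie-breaking among longest edges
            mid = 0.5 * (lower[dim] + upper[dim])
            left_upper, right_lower = upper.copy(), lower.copy()
            left_upper[dim], right_lower[dim] = mid, mid
            children += [(lower, left_upper), (right_lower, upper)]
        cells = children
    return cells

cells = random_max_edge_partition(depth=3, d=2, rng=np.random.default_rng(1))
```

Starting from the unit square, the first two levels always produce four 0.5 × 0.5 cells, and randomness only matters when several edges tie for the longest.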

6.2 Simulation Results

In Sections 6.2.1 and 6.2.2, we analyze the influence of the underlying parameters and sample sizes to validate the theoretical findings. In Section 6.2.3, we analyze the parameters of LPCT to understand their behavior. We illustrate various ways that public data benefits